Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Desktop & Mobile XML Sitemap Submitted But Only Desktop Sitemap Indexed On Google Search Console

-

Hi!

The Problem

We have submitted to GSC a sitemap index. Within that index there are 4 XML Sitemaps. Including one for the desktop site and one for the mobile site. The desktop sitemap has 3300 URLs, of which Google has indexed (according to GSC) 3,000 (approx). The mobile sitemap has 1,000 URLs of which Google has indexed 74 of them.

The pages are crawlable, the site structure is logical. And performing a Landing Page URL search (showing only Google/Organic source/medium) on Google Analytics I can see that hundreds of those mobile URLs are being landed on. A search on mobile for a longtail keyword from a (randomly selected) page shows a result in the SERPs for the mobile page that judging by GSC has not been indexed.

Could this be because we have recently added rel=alternate tags on our desktop pages (and of course corresponding canonical ones on mobile). Would Google then 'not index' rel=alternate page versions?

Thanks for any input on this one.

-

Hi Allison, any updates on this?

From my understanding, it is possible that Google is not indexing the mobile versions of pages if they are simply corresponding to the desktop pages (and indicated as such with the rel=alternate mobile switchboard tags). If they have that information they may simply index the desktop pages and then display the mobile URL in search results.

It is also possible that the GSC data is not accurate - if you do a 'site:' search for your mobile pages (I would try something like 'site:domain/m/' and see what shows up), does it show a higher number of mobile pages than what you're seeing in GSC?

Can you check data for your mobile rankings and see what URLs are being shown for mobile searchers? If your data is showing that mobile users are landing on these pages from search, this would indicate that they are being shown in search results, even if they're not showing up as "indexed" in GSC.

-

Apologies on the delayed reply and thank you for providing this information!

Has there been any change in this trend over the last week? I do know that subfolder mobile sites are generally not recommended by search engines. That being said, I do not feel the mobile best practice would change as a result. Does the site automatically redirect the user based on their device? If so, be sure Google is redirecting appropriately as well.

"When a website is configured to serve desktop and mobile browsers using different URLs, webmasters may want to automatically redirect users to the URL that best serves them. If your website uses automatic redirection, be sure to treat all Googlebots just like any other user-agent and redirect them appropriately."

Here is Google's documentation on best practices for mobile sites with separate URLs. I do believe the canonical and alternate tags should be left in place. It may be worth experimenting with the removal of these mobile URLs from the sitemap though I feel this is more of a redundancy issue than anything.

I would also review Google's documentation on 'Common Mobile Mistakes', perhaps there is an issue that is restricting search engines from crawling the mobile site efficiently.

Hope that helps!

-

Hi Paul and Joe

Thanks for the reply!

Responsive is definitely in the works...

In the meantime to answer:

-

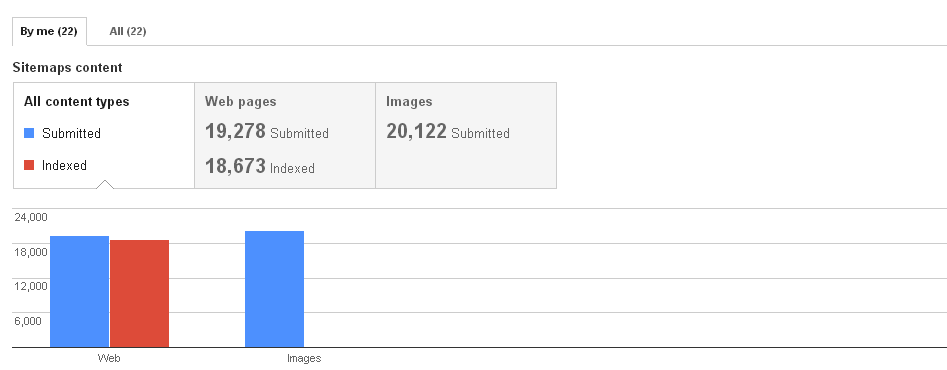

GSC is setup for the mobile site. However its not on a subdomain, its a subdirectory mobile site. So rather than m.site.com we have www.site.com/m for the mobile sites. A sitemap has been submitted and thats where I can see the data as shown in the image.

-

Because the mobile site is a subdirectory site the data becomes a little blended with the main domain data in Google Search Console. If I want to see Crawl Stats for example Google advises "To see stats and diagnostic information, view the data for (https://www.site.com/)."

-

re: "My recommendation is to remove the XML sitemap and rely on the rel=alternate/canonical tags to get the mobile pages indexed. Google's John Mueller has stated that you do not need a mobile XML sitemap file." I had read this previously, but due to the nature of the sub-directory setup of the site, the mobile sitemap became part of the sitemap index...rather than having just one large sitemap.

Thoughts?

-

-

ASs joe says - set up a separate GSC profile for the mdot subdomain. The use that to submit the mdot sitemap directly if you wish. You'll get vastly better data about the performance of the mdot site by having it split out, instead of mixed into and obfuscated by the desktop data.

Paul

-

Hi Alison,

While this is a bit late, I would recommend moving to a responsive site when/if possible. Much easier to manage, fewer issues with search engines.

My recommendation is to remove the XML sitemap and rely on the rel=alternate/canonical tags to get the mobile pages indexed. Google's John Mueller has stated that you do not need a mobile XML sitemap file.

Also, do you have Google Search Console set up for both the m. mobile site and the desktop version? It does not seem so with all sitemaps listed in the one property in your screenshot. If not, I recommend setting this up as you may receive some valuable insights into how Google is crawling the mobile site.

I'd also review Google's Common Mobile Mistakes guide to see if any of these issues could be impacting your situation. Hope this helps!

Got a burning SEO question?

Subscribe to Moz Pro to gain full access to Q&A, answer questions, and ask your own.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

Unsolved Google Search Console Still Reporting Errors After Fixes

Hello, I'm working on a website that was too bloated with content. We deleted many pages and set up redirects to newer pages. We also resolved an unreasonable amount of 400 errors on the site. I also removed several ancient sitemaps that listed content deleted years ago that Google was crawling. According to Moz and Screaming Frog, these errors have been resolved. We've submitted the fixes for validation in GSC, but the validation repeatedly fails. What could be going on here? How can we resolve these error in GSC.

Technical SEO | | tif-swedensky0 -

Pages are Indexed but not Cached by Google. Why?

Hello, We have magento 2 extensions website mageants.com since 1 years google every 15 days cached my all pages but suddenly last 15 days my websites pages not cached by google showing me 404 error so go search console check error but din't find any error so I have cached manually fetch and render but still most of pages have same 404 error example page : - https://www.mageants.com/free-gift-for-magento-2.html error :- http://webcache.googleusercontent.com/search?q=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&rlz=1C1CHBD_enIN803IN804&oq=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&aqs=chrome..69i57j69i58.1569j0j4&sourceid=chrome&ie=UTF-8 so have any one solutions for this issues

Technical SEO | | vikrantrathore0 -

Indexed, but not shown in search result

Hi all We face this problem for www.residentiebosrand.be, which is well programmed, added to Google Search Console and indexed. Web pages are shown in Google for site:www.residentiebosrand.be. Website has been online for 7 weeks, but still no search results. Could you guys look at the update below? Thanks!

Technical SEO | | conversal0 -

If I'm using a compressed sitemap (sitemap.xml.gz) that's the URL that gets submitted to webmaster tools, correct?

I just want to verify that if a compressed sitemap file is being used, then the URL that gets submitted to Google, Bing, etc and the URL that's used in the robots.txt indicates that it's a compressed file. For example, "sitemap.xml.gz" -- thanks!

Technical SEO | | jgresalfi0 -

Image Indexing Issue by Google

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

CDN Being Crawled and Indexed by Google

I'm doing a SEO site audit, and I've discovered that the site uses a Content Delivery Network (CDN) that's being crawled and indexed by Google. There are two sub-domains from the CDN that are being crawled and indexed. A small number of organic search visitors have come through these two sub domains. So the CDN based content is out-ranking the root domain, in a small number of cases. It's a huge duplicate content issue (tens of thousands of URLs being crawled) - what's the best way to prevent the crawling and indexing of a CDN like this? Exclude via robots.txt? Additionally, the use of relative canonical tags (instead of absolute) appear to be contributing to this problem as well. As I understand it, these canonical tags are telling the SEs that each sub domain is the "home" of the content/URL. Thanks! Scott

Technical SEO | | Scott-Thomas0 -

How to push down outdated images in Google image search

When you do a Google image search for one of my client's products, you see a lot of first-generation hardware (the product is now in its third generation). The client wants to know what they can do to push those images down so that current product images rise to the top. FYI: the client's own image files on their site aren't very well optimized with keywords. My thinking is to have the client optimize their own images and the ones they give to the media with relevant keywords in file names, alt text, etc. Eventually, this should help push down the outdated images is my thinking. Any other suggestions? Thanks so much.

Technical SEO | | jimmartin_zoho.com0 -

Does Google index XML files?

Does Google or other search engines include XML files in their index? More specifically, I am wondering how Google knows the difference between an xml filetype and an RSS feed.

Technical SEO | | nicole.healthline0