Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

How to Stop Google from Indexing Old Pages

-

We moved from a .php site to a Java site on April 10th. It's almost 2 months later and Google continues to crawl old pages that no longer exist (225,430 Not Found errors, to be exact).

These pages no longer exist on the site and there are no internal or external links pointing to these pages.

Google has crawled the site since the go live, but continues to try and crawl these pages.

What are my next steps?

-

All my clients are impatient with Google's crawl. I think the speed of life on the web has spoiled them. Assuming your site isn't a huge e-commerce or subject-matter site...you will get crawled but not right away. Smaller, newer sites take time.

Take any concern and put it towards link building to the new site so Google's crawlers find it faster (via their seed list). Get it up on DMOZ, get that Twitter account going, post videos to YouTube, etc. Get some juicy high-PR inbound links and that could help speed up the indexing. Good luck!

-

Like Mike said above, there still isn't enough info provided for us to give you a very clear response, but I think he is right to point out that you shouldn't really care about the extinct pages in Google's index. They should, at some point, expire.

You can specify particular URLs to remove in GWT, or your robots.txt file, but that doesn't seem the best option for you. My recommendation is to just prepare the new site in the new location, upload a good clean sitemap.xml to GWT, and let them adjust. If you have much of the same content as well, Google will know due to the page creation date which is the newer and more appropriate site. Hate to say "trust the engines" but in this case, you should.

You may also consider a rel="author" tag in your new site to help Google prioritize the new site. But really the best thing is a new site on a new domain, a nice sitemap.xml, and patience.
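For what it's worth, here is a minimal sketch of generating that "good clean sitemap.xml" with Python's standard library. The `build_sitemap` helper and the example.com URLs are made up for illustration; the idea is simply that only live pages on the new site get listed, never the old .php URLs.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs on the new site; only pages that really exist belong here.
print(build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/products/widgets",
]))
```

Save the output as sitemap.xml at the site root and submit it in Webmaster Tools.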

-

To further clear things up...

I can 301 every page from the old .php site to our new homepage (However, I'm concerned about Google's impression of our overall user experience).

Or

I can 410 every page from the old .php site (Wouldn't this tell Google to stop trying to crawl these pages? Although these pages technically still exist, they just have a different URL and directory structure. Too many to set up individual 301's tho).

Or

I can do nothing and wait for these pages to drop off of Google's radar.

What is the best option?

-

After reading the further responses here I'm wondering something...

You switched to a new site, can't 301 the old pages, and have no control over the old domain... So why are you worried about pages 404ing on an unused site you don't control anymore?

Maybe I'm missing something here or not reading it right. Who does control the old domain then? Is the old domain just completely gone? Because if so, why would it matter that Google is crawling non-existent pages on a dead site and returning 404s and 500s? Why would that necessarily affect the new site?

Or is it the same site but you switched to Java from PHP? If so, wouldn't your CMS have a way of redirecting the old pages that are technically still part of your site to the newer relevant pages on the site?

I feel like I'm missing pertinent info that might make this easier to digest and offer up help.

-

Sean,

Many thanks for your response. We have submitted a new, fresh site map to Google, but it seems like it's taking them forever to digest the changes.

We've been keeping track of rankings, and they've been going down, but there are so many changes going on at once with the new site, it's hard to tell what is the primary factor for the decline.

Is there a way to send Google all of the pages that don't exist and tell them to stop looking for them?

Thanks again for your help!

-

You would need access to the domain to set up the 301. If you no longer can edit files on the old domain, then your best bet is to update Webmaster Tools with the new site info and a sitemap.xml and wait for their caches to expire and update.

Somebody can correct me on this if I'm wrong, but getting so many 404s and 500's already has probably impacted your rankings so significantly, that you may be best served to approach the whole effort as a new site. Again, without more data, I'm left making educated guesses here. And if you aren't tracking your rankings (as you asked how much it is impacting...you should be able to see), then I would let go of the old site completely and build search traffic fresh on the new domain. You'd probably generate better results in the long term by jettisoning a defunct site with so many errors.

I confess, without being able to dig into the site analytics and traffic data, I can't give direct tactical advice. However, the above is what I would certainly do. Resubmitting a fresh sitemap.xml to GWT and deleting all the info to the old site in there is probably your best option. I defer to anyone with better advice. What a tough position you are in!

-

Thanks all for the feedback.

We no longer have access to the old domain. How do we institute a 301 if we can no longer access the page?

We have over 200,000 pages throwing 404's and over 70,000 pages throwing 500 errors.

This probably doesn't look good to Google. How much is this impacting our rankings?

-

Like others have said, a 301 redirect and updating Webmaster Tools should be most of what you need to do. You didn't say if you still have access to the old domain (where the pages are still being crawled) or if you get a 404, 503, or some other error when navigating to those pages. What are you seeing or can you provide a sample URL? That may help eliminate some possibilities.
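If you can read the access logs of whatever server is answering those old URLs, a rough sketch like this would show which status codes Googlebot is actually getting. It assumes the common/combined log format; the function name and the sample lines are invented for the example.

```python
import re
from collections import Counter

# Pulls the request path and status code out of a common/combined log line.
LOG_LINE = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def googlebot_status_counts(lines):
    """Count HTTP status codes for requests whose log line mentions Googlebot."""
    counts = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("status")] += 1
    return counts


# Made-up sample lines, only to show the expected input shape.
sample = [
    '66.249.66.1 - - [01/Jan/2000:10:00:00 +0000] "GET /old/page.php HTTP/1.1" 404 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [01/Jan/2000:10:00:01 +0000] "GET /old/cart.php HTTP/1.1" 500 128 "-" "Googlebot/2.1"',
    '203.0.113.9 - - [01/Jan/2000:10:00:02 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]
print(googlebot_status_counts(sample))
```

Matching on the user-agent string alone is only a first pass, since anyone can claim to be Googlebot.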

-

You should implement 301 redirects from your old pages to their new locations. It sounds like you have a fairly large site, which means Google has tons of your old pages in its index that it is going to continue to crawl for some time. It's probably not going to impact you negatively, but if you want to get rid of the errors sooner I would throw in some 301s.

With the 301s you'll also get any link value that the old pages may be getting from external links (I know you said there are none, but with 200K+ pages it's likely that at least one of the pages is being linked to from somewhere).
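With 200K+ pages nobody is going to write individual redirects, so the usual approach is a handful of pattern rules. A minimal sketch, assuming the old and new URL structures map onto each other in a regular way (the patterns, paths, and `new_location` helper below are hypothetical, not the poster's real structure):

```python
import re

# Hypothetical rules: (pattern for the old .php path, template for the new path).
REDIRECT_RULES = [
    (re.compile(r"^/category/(?P<name>[\w-]+)\.php$"), "/shop/{name}"),
    (re.compile(r"^/articles/(?P<slug>[\w-]+)\.php$"), "/blog/{slug}"),
]


def new_location(old_path):
    """Return the new path for an old URL path, or None if no rule matches."""
    for pattern, template in REDIRECT_RULES:
        match = pattern.match(old_path)
        if match:
            return template.format(**match.groupdict())
    return None


print(new_location("/category/red-shoes.php"))
print(new_location("/something-else.php"))
```

Whatever sits in front of the new site (servlet filter, web server rewrite rules, load balancer) would then answer with a 301 and a `Location` header whenever a rule matches, and fall through to a 404 or 410 when it returns `None`.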

-

Have you submitted a new sitemap to Webmaster Tools? Also, you could consider 301 redirecting the pages to relevant new pages to capitalize on any link equity or ranking power they may have had before. Otherwise Google should eventually stop crawling them because they are 404. I've had a touch of success getting them to stop crawling quicker (or at least it seems quicker) by changing some 404s to 410s.
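To illustrate the 404-to-410 switch, here is a small WSGI sketch that answers 410 Gone for any retired .php URL. It is only an illustration of the status-code logic, assuming every path ending in .php is permanently gone; the real site is Java, so the same check would live in a filter or the web server config there.

```python
def app(environ, start_response):
    """Minimal WSGI app: 410 Gone for retired .php URLs, 404 for anything else."""
    path = environ.get("PATH_INFO", "")
    if path.endswith(".php"):
        # 410 says the page was removed on purpose and is not coming back.
        status, body = "410 Gone", b"This page has been permanently removed.\n"
    else:
        status, body = "404 Not Found", b"Not found.\n"
    start_response(status, [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

You can try it locally with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and request a .php path to see the 410.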


Related Questions

-

Google keeps marking different pages as duplicates

My website has many pages like this: mywebsite/company1/valuation mywebsite/company2/valuation mywebsite/company3/valuation mywebsite/company4/valuation ... These pages describe the valuation of each company. These pages were never identical but initially, I included a few generic paragraphs like what is valuation, what is a valuation model, etc... in all the pages so some parts of these pages' content were identical. Google marked many of these pages as duplicated (in Google Search Console) so I modified the content of these pages: I removed those generic paragraphs and added other information that is unique to each company. As a result, these pages are extremely different from each other now and have little similarities. Although it has been more than 1 month since I made the modification, Google still marks the majority of these pages as duplicates, even though Google has already crawled their new modified version. I wonder whether there is anything else I can do in this situation? Thanks

Technical SEO | | TuanDo96270 -

Escort directory page indexing issues

Re; escortdirectory-uk.com, escortdirectory-usa.com, escortdirectory-oz.com.au,

Technical SEO | | ZuricoDrexia

Hi, We are an escort directory with 10 years history. We have multiple locations within the following countries, UK, USA, AUS. Although many of our locations (towns and cities) index on page one of Google, just as many do not. Can anyone give us a clue as to why this may be?0 -

Google is indexing bad URLS

Hi All, The site I am working on is built on Wordpress. The plugin Revolution Slider was downloaded. While no longer utilized, it still remained on the site for some time. This plugin began creating hundreds of URLs containing nothing but code on the page. I noticed these URLs were being indexed by Google. The URLs follow the structure: www.mysite.com/wp-content/uploads/revslider/templates/this-part-changes/ I have done the following to prevent these URLs from being created & indexed: 1. Added a directive in my Htaccess to 404 all of these URLs 2. Blocked /wp-content/uploads/revslider/ in my robots.txt 3. Manually de-inedex each URL using the GSC tool 4. Deleted the plugin However, new URLs still appear in Google's index, despite being blocked by robots.txt and resolving to a 404. Can anyone suggest any next steps? I Thanks!

Technical SEO | | Tom3_150 -

How to check if an individual page is indexed by Google?

So my understanding is that you can use site: [page url without http] to check if a page is indexed by Google, is this 100% reliable though? Just recently Ive worked on a few pages that have not shown up when Ive checked them using site: but they do show up when using info: and also show their cached versions, also the rest of the site and pages above it (the url I was checking was quite deep) are indexed just fine. What does this mean? thank you p.s I do not have WMT or GA access for these sites

Technical SEO | | linklander0 -

My video sitemap is not being index by Google

Dear friends, I have a videos portal. I created a video sitemap.xml and submit in to GWT but after 20 days it has not been indexed. I have verified in bing webmaster as well. All videos are dynamically being fetched from server. My all static pages have been indexed but not videos. Please help me where am I doing the mistake. There are no separate pages for single videos. All the content is dynamically coming from server. Please help me. your answers will be more appreciated................. Thanks

Technical SEO | | docbeans0 -

Image Indexing Issue by Google

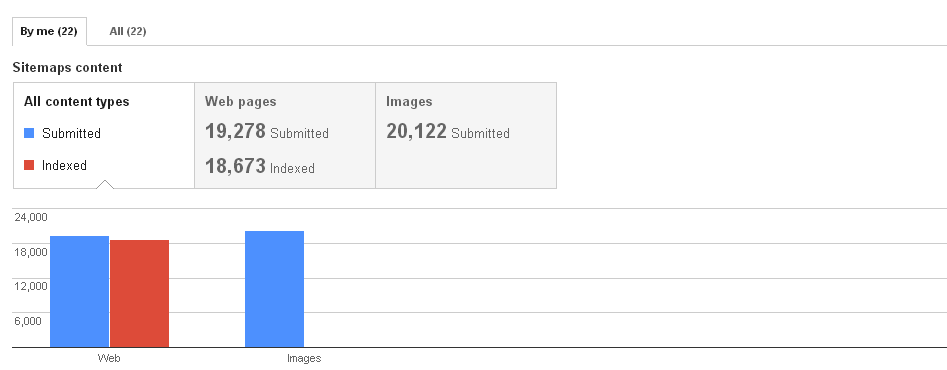

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

How Does Google's "index" find the location of pages in the "page directory" to return?

This is my understanding of how Google's search works, and I am unsure about one thing in specific: Google continuously crawls websites and stores each page it finds (let's call it "page directory") Google's "page directory" is a cache so it isn't the "live" version of the page Google has separate storage called "the index" which contains all the keywords searched. These keywords in "the index" point to the pages in the "page directory" that contain the same keywords. When someone searches a keyword, that keyword is accessed in the "index" and returns all relevant pages in the "page directory" These returned pages are given ranks based on the algorithm The one part I'm unsure of is how Google's "index" knows the location of relevant pages in the "page directory". The keyword entries in the "index" point to the "page directory" somehow. I'm thinking each page has a url in the "page directory", and the entries in the "index" contain these urls. Since Google's "page directory" is a cache, would the urls be the same as the live website (and would the keywords in the "index" point to these urls)? For example if webpage is found at wwww.website.com/page1, would the "page directory" store this page under that url in Google's cache? The reason I want to discuss this is to know the effects of changing a pages url by understanding how the search process works better.

Technical SEO | | reidsteven750 -

Why google index my IP URL

hi guys, a question please. if site:112.65.247.14 , you can see google index our website IP address, this could duplicate with our darwinmarketing.com content pages. i am not quite sure why google index my IP pages while index domain pages, i understand this could because of backlink, internal link and etc, but i don't see obvious issues there, also i have submit request to google team to remove ip address index, but seems no luck. Please do you have any other suggestion on this? i was trying to do change of address setting in Google Webmaster Tools, but didn't allow as it said "Restricted to root level domains only", any ideas? Thank you! boson

Technical SEO | | DarwinChinaSEO0