Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

PDF for link building - avoiding duplicate content

-

Hello,

We've got an article that we're turning into a PDF. Both the article and the PDF will be on our site. This PDF is a good, thorough piece of content on how to choose a product.

We're going to strip out all of the links to our in the article and create this PDF so that it will be good for people to reference and even print. Then we're going to do link building through outreach since people will find the article and PDF useful.

My question is, how do I use rel="canonical" to make sure that the article and PDF aren't duplicate content?

Thanks.

-

Hey Bob

I think you should forget about any kind of perceived conventions and have whatever you think works best for your users and goals.

Again, look at Unbounce: that is a custom landing page with a homepage link (to share the love) but not the general site navigation.

They also have a footer to do a bit more link love but really, do what works for you.

Forget conventions - do what works!

Hope that helps

Marcus -

I see, thanks! I think it's important not to have the ecommerce navigation on the page promoting the PDF. What would you say is ideal for the page with the PDF on it: what kind of navigation and graphical header should it have?

-

Yep, check the HTTP headers with webbug or there are a bunch of browser plugins that will let you see the headers for the document.
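For anyone who prefers a script to a browser plugin, here is a minimal Python sketch of that header check. It assumes the server sends a standard `Link` response header and that it answers HEAD requests; the function names `canonical_from_link_header` and `fetch_canonical` are made up for illustration and do not come from any tool mentioned in this thread.

```python
import re
import urllib.request


def canonical_from_link_header(link_header):
    """Return the URL marked rel="canonical" in a Link header value, or None."""
    if not link_header:
        return None
    # A Link header can hold several comma-separated entries: <url>; rel="..."
    for match in re.finditer(r'<([^>]*)>\s*((?:;\s*[^,;]+)*)', link_header):
        url, params = match.group(1), match.group(2)
        rel = re.search(r'rel\s*=\s*"?([^";]+)"?', params, re.IGNORECASE)
        if rel and "canonical" in rel.group(1).lower().split():
            return url
    return None


def fetch_canonical(url):
    """Send a HEAD request and return the canonical URL from the response headers."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request) as response:
        # Join repeated Link headers so every entry is inspected.
        link_values = response.headers.get_all("Link") or []
        return canonical_from_link_header(", ".join(link_values))
```

Run against a live server, `fetch_canonical("http://www.domain.com/pdfs/white-papers.pdf")` should return the article URL if the .htaccess rule is being applied, and `None` if the header is missing.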

That said, I would push to drive the links to the page though rather than the document itself and just create a nice page that houses the document and make that the link target.

You could even make the PDF link available only by email once they have signed up, or some such, since canonical is only a hint and you would still be better off getting those links flooding into a real page on the site.

You could even offer up some ready-made HTML that links to your main page, to make it easier for folks to link to you. If you take a look at any savvy infographic, the folks behind it will try to draw links to a page rather than to the image itself, for the very same reasons.

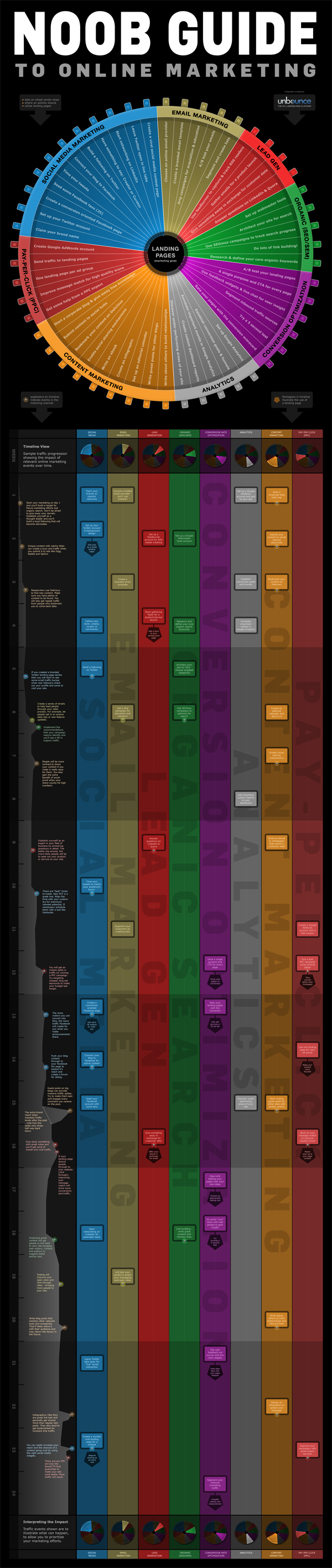

If you look at something like the Noobs Guide to Online Marketing from Unbounce then you will see something like this as the suggested linking code:

(the infographic image, wrapped in a link to http://unbounce.com/noob-guide-to-online-marketing-infographic/)

Unbounce – The DIY Landing Page Platform

So, the image is there but the link they are pimping is a standard page:

http://unbounce.com/noob-guide-to-online-marketing-infographic/

They also cheekily add an extra homepage link in as well with some keywords and the brand so if folks don't remove that they still get that benefit.

Ultimately, it means that when links flood into the site they benefit the whole site rather than just promote one PDF.

Just my tuppence!

Marcus -

Thanks for the code Marcus.

Actually, the PDF is what people will be linking to. It's a guide for websites. I think the PDF will be much easier to promote than the article. I assume so, anyway.

Is there a way to make sure my canonical code in htaccess is working after I insert the code?

Thanks again,

Bob

-

Hey Bob

There is a much easier way to do this: simply keep the PDFs that you don't want indexed in a folder that you block in robots.txt. This way you can just drop PDFs into articles and link to them, knowing full well that these files will not be indexed.

Assuming you had a PDF called article.pdf in a folder called pdfs/ then the following would prevent indexation.

User-agent: *
Disallow: /pdfs/

Or to just block the file itself:

User-agent: *
Disallow: /pdfs/yourfile.pdf

Additionally, there is no reason not to add the canonical link as well, and if you find people are linking directly to the PDF then having this would ensure that the equity associated with those links was correctly attributed to the parent page (always a good thing).

Header add Link '<http://www.url.co.uk/pdfs/article.html>; rel="canonical"'

Generally, there are better ways to block indexation than with robots.txt but in the case of PDFs, we really don't want these files indexed as they make for such poor landing pages (no navigation) and we certainly want to remove any competition or duplication between the page and the PDF so in this case, it makes for a quick, painless and suitable solution.
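The two robots.txt variants above can be sanity-checked before they go live. This is a rough sketch using Python's standard `urllib.robotparser`; the helper name `is_blocked`, the `Googlebot` default, and the example URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# The two rule sets from the post above, as they would appear in robots.txt.
FOLDER_RULES = "User-agent: *\nDisallow: /pdfs/\n"
FILE_RULES = "User-agent: *\nDisallow: /pdfs/yourfile.pdf\n"


def is_blocked(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_blocked(FOLDER_RULES, "http://www.url.co.uk/pdfs/article.pdf"))
    print(is_blocked(FOLDER_RULES, "http://www.url.co.uk/pdfs/article.html"))
    print(is_blocked(FILE_RULES, "http://www.url.co.uk/pdfs/yourfile.pdf"))
```

One thing worth keeping in mind: a crawler that obeys the Disallow rule does not request the PDF at all, so it would not see a canonical header sent with that file.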

Hope that helps!

Marcus -

Thanks ThompsonPaul,

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.html

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.html>; rel="canonical"'

-

You can insert the canonical header link using your site's .htaccess file, Bob. I'm sure Hostgator provides access to the htaccess file through ftp (sometimes you have to turn on "show hidden files") or through the file manager built into your cPanel.
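As a rough illustration of what such a rule could look like, the Python sketch below prints a .htaccess fragment that limits the header to a single file by wrapping it in a `<Files>` block. It assumes Apache with mod_headers enabled and a .htaccess file placed in (or above) the folder holding the PDF; the helper name `htaccess_canonical_rule` is hypothetical, and the file name and URL are the example values used elsewhere in this thread.

```python
def htaccess_canonical_rule(pdf_filename, canonical_url):
    """Render a .htaccess fragment that adds a canonical Link header to one file."""
    return (
        f'<Files "{pdf_filename}">\n'
        f"    Header add Link '<{canonical_url}>; rel=\"canonical\"'\n"
        "</Files>\n"
    )


if __name__ == "__main__":
    print(
        htaccess_canonical_rule(
            "white-papers.pdf",
            "http://www.domain.com/articles/article.html",
        )
    )
```

Without the `<Files>` wrapper, a bare `Header add Link` line would be sent with every response served from that folder, which is probably not what is wanted.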

Check tip #2 in this recent SEOMoz blog article for specifics:

seomoz.org/blog/htaccess-file-snippets-for-seos

Just remember too - you will want to do the same kind of on-page optimization for the PDF as you do for regular pages.

- Give it a good, descriptive, keyword-appropriate, dash-separated file name. (essential for usability as well, since it will become the title of the icon when saved to someone's desktop)

- Fill out the metadata for the PDF, especially the Title and Description. In Acrobat it's under File -> Properties -> Description tab (to get the meta-description itself, you'll need to click on the Additional Metadata button)
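The file-naming tip can be automated if there are several PDFs to publish. A small sketch, assuming the file name is derived from the document title; `dash_separated_filename` is an invented helper name.

```python
import re


def dash_separated_filename(title, extension="pdf"):
    """Turn a document title into a lowercase, dash-separated file name."""
    # Keep only runs of letters and digits, then join them with dashes.
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words) + "." + extension


if __name__ == "__main__":
    print(dash_separated_filename("How to Choose a Product"))
```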

I'd be tempted to build the links to the HTML page as much as possible, as those will directly help ranking, unlike the PDF's inbound links, which will have to pass their link juice through the canonical (assuming you're using it). Plus, the visitor will get a preview of the PDF's content and context from the rest of your site, which may increase trust and engender further engagement.

Your comment about links in the PDF got kind of muddled, but you'll definitely want to make certain there are good links and calls to action back to your website within the PDF - preferably on each page. Otherwise there's no clear "next step" for users reading the PDF back to a purchase on your site. Make sure to put Analytics tracking tags on these links so you can assess the value of traffic generated back from the PDF - otherwise the traffic will just appear as Direct in your Analytics.
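One common way to tag those links is with Google Analytics campaign parameters (`utm_source`, `utm_medium`, `utm_campaign`). The sketch below shows how a tagged URL could be built; the helper name `add_campaign_tags` and the example values are assumptions, so pick whatever naming fits your own reports.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_campaign_tags(url, source, medium, campaign):
    """Append utm_* campaign parameters to a URL, keeping any existing query string."""
    parts = urlparse(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_source", source),
        ("utm_medium", medium),
        ("utm_campaign", campaign),
    ]
    return urlunparse(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    print(
        add_campaign_tags(
            "http://www.domain.com/products/",
            source="white-paper",
            medium="pdf",
            campaign="buying-guide",
        )
    )
```

The resulting URL is what goes into each link inside the PDF, so visits arriving from the document can be separated from plain Direct traffic.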

Hope that all helps;

Paul

-

Can I just use htaccess?

See here: http://www.seomoz.org/blog/how-to-advanced-relcanonical-http-headers

We only have one pdf like this right now and we plan to have no more than five.

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.pdf

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.pdf>; rel="canonical"'

-

How do I know if I can do an HTTP header request? I'm using shared hosting through hostgator.

-

PDFs don't seem to rank as well as normal webpages. They do still rank, don't get me wrong: we have over 100 PDF pages that get traffic for us. Which version is the main one is really up to you; it depends on what you want to show in the search results. I think it would be easier to rank a normal webpage, though. If you use rel="canonical", it will pass most of the link juice: not all, but most.


-

Thank you DoRM,

I assume that the PDF is what I want to be the main version since that is what I'll be marketing, but I could be wrong? What if I get backlinks to both pages, will both sets of backlinks count?

-

Indicate the canonical version of a URL by responding with the Link rel="canonical" HTTP header.

Adding rel="canonical" to the head section of a page is useful for HTML content, but it can't be used for PDFs and other file types indexed by Google Web Search. In these cases you can indicate a canonical URL by responding with the Link rel="canonical" HTTP header, like this (note that to use this option, you'll need to be able to configure your server):

Link: <http://www.example.com/downloads/white-paper.pdf>; rel="canonical"

Google currently supports these link header elements for Web Search only.

You can read more here: http://support.google.com/webmasters/bin/answer.py?hl=en&answer=139394
