Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

How to create site map for large site (ecommerce type) that has 1000's if not 100,000 of pages.

-

I know this is kind of a newbie question but I am having an amazing amount of trouble creating a sitemap for our site Bestride.com. We just did a complete redesign (look and feel, functionality, the works) and now I am trying to create a site map. Most of the generators I have used "break" after reaching some number of pages. I am at a loss as to how to create the sitemap. Any help would be greatly appreciated!

Thanks

-

I agree with Chris. With such large websites it would be advisable having a sitemap index and then splitting the index into various individual indexes such as Pages, Products, Categories, images, media, tags etc.

-

The easiest thing i can think of is to write a script that works with your dispatcher to create a site map. The format I would use is add the page and all of the "product images" on the page to the map and move to the next. At the same time I would use an auto increment variable to keep track of how many lines you have written. When you get around 50k, write out the name of the next site map file that the program will create and have them chained together this way.

-

That's a great help Chris, thank you! And thanks to all for your help!

-

Typically, a sitemap is going to include every page on the site. As Francesca said, each sitemap can be up to 50K urls and if you need multiple sitemaps then you create a sitemap index that points to the rest of the sitemaps.

-

Thanks for the feedback!

I will look into screamingfrog for sure.

@Lesley - we are using a custom platform (in house) so we don't have that functionality. The issue is that we have a lot of inventory (millions) of cars. We have built (and are releasing new functionality today) to provide internal links so that Google can crawl all the inventory easily (users can too :). My question about sitemaps has boiled down to this: Do we need to build the sitemap to include every single page (all the inventory) or do we provide a "map" so that google can find the top pages and then crawl the inventory from there. Again the site is bestride.com. If anyone wants to take a look at the site, that would be fantastic!

Thanks

-

Are you using a custom platform or an off the shelf e-commerce package? Most off the shelf packages actually have a module that can create a site map and a lot have it where you can cron it too.

-

Of course, you can also use the moz's crawl test report at http://pro.moz.com/tools/crawl-test

-

Hi Kristin,

Each sitemap.xml can support maximum 50.000 URLs. So, If you have a site with more than 100K, It'd be better to create 2 or 3 o 4 etc sitemaps.xml in order to contain all URLs. Hope it is useful.

Kind regards!

Francesca

-

You can use screamingfrog to create your sitemap. You just need to license it for crawl more than 500 URI.

Got a burning SEO question?

Subscribe to Moz Pro to gain full access to Q&A, answer questions, and ask your own.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

Sitemap.xml strategy for site with thousands of pages

I have a client that has a HUGE website with thousands of product pages. We don't currently have a sitemap.xml because it would take so much power to map the sitemap. I have thought about creating a sitemap for the key pages on the website - but didn't want to hurt the SEO on the thousands of product pages. If you have a sitemap.xml that only has some of the pages on your site - will it negatively impact the other pages, that Google has indexed - but are not listed on the sitemap.xml.

Technical SEO | | jerrico10 -

Unique page for each product variant? (Not eCommerce)

Hi Mozzers, Just looking for a little advice before I launch into a huge workload. We have landing pages for vehicle manufacturers. We then have anchor links in that page for each vehicle model that manufacturer has, with further info on the model further down the page. So we're toying with the idea of launching a unique page for each of the models rather than having them all on the same landing page. This will take an age and a minute but if it is worth it, we want to do it. Do you guys see a benefit to having unique pages for each model? Do you think it would attract more natural links? Would this help or hinder the manufacturer landing page in general? Should the manufacturer landing page be noindex so as to avoid duplicate content issues? I can see a lot of work and risk, just looking for a few opinions. PM for more info. Thanks a lot people, Jamie

Technical SEO | | SanjidaKazi0 -

Does Title Tag location in a page's source code matter?

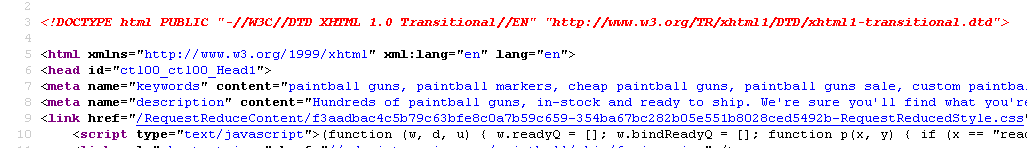

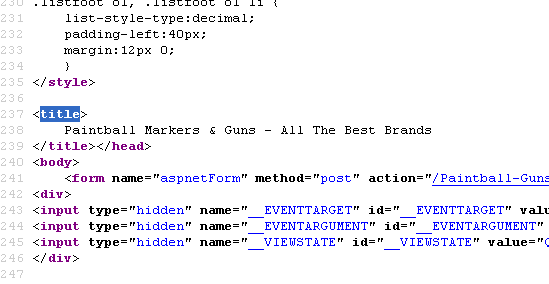

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

What is the best way to find missing alt tags on my site (site wide - not page by page)?

I am looking to find all the missing alt tags on my site at once. I have a FF extension that use to do it page by page, but my site is huge and that will take forever. Thanks!!

Technical SEO | | franchisesolutions1 -

ECommerce: Best Practice for expired product pages

I'm optimizing a pet supplies site (http://www.qualipet.ch/) and have a question about the best practice for expired product pages. We have thousands of products and hundreds of our offers just exist for a few months. Currently, when a product is no longer available, the site just returns a 404. Now I'm wondering what a better solution could be: 1. When a product disappears, a 301 redirect is established to the category page it in (i.e. leash would redirect to dog accessories). 2. After a product disappers, a customized 404 page appears, listing similar products (but the server returns a 404) I prefer solution 1, but am afraid that having hundreds of new redirects each month might look strange. But then again, returning lots of 404s to search engines is also not the best option. Do you know the best practice for large ecommerce sites where they have hundreds or even thousands of products that appear/disappear on a frequent basis? What should be done with those obsolete URLs?

Technical SEO | | zeepartner1 -

Adding 'NoIndex Meta' to Prestashop Module & Search pages.

Hi Looking for a fix for the PrestaShop platform Look for the definitive answer on how to best stop the indexing of PrestaShop modules such as "send to a friend", "Best Sellers" and site search pages. We want to be able to add a meta noindex ()to pages ending in: /search?tag=ball&p=15 or /modules/sendtoafriend/sendtoafriend-form.php We already have in the robot text: Disallow: /search.php

Technical SEO | | reallyitsme

Disallow: /modules/ (Google seems to ignore these) But as a further tool we would like to incude the noindex to all these pages too to stop duplicated pages. I assume this needs to be in either the head.tpl or the .php file of each PrestaShop module.? Or is there a general site wide code fix to put in the metadata to apply' Noindex Meta' to certain files. Current meta code here: Please reply with where to add code and what the code should be. Thanks in advance.0 -

Blocking URL's with specific parameters from Googlebot

Hi, I've discovered that Googlebot's are voting on products listed on our website and as a result are creating negative ratings by placing votes from 1 to 5 for every product. The voting function is handled using Javascript, as shown below, and the script prevents multiple votes so most products end up with a vote of 1, which translates to "poor". How do I go about using robots.txt to block a URL with specific parameters only? I'm worried that I might end up blocking the whole product listing, which would result in de-listing from Google and the loss of many highly ranked pages. DON'T want to block: http://www.mysite.com/product.php?productid=1234 WANT to block: http://www.mysite.com/product.php?mode=vote&productid=1234&vote=2 Javacript button code: onclick="javascript: document.voteform.submit();" Thanks in advance for any advice given. Regards,

Technical SEO | | aethereal

Asim0 -

Should we use Google's crawl delay setting?

We’ve been noticing a huge uptick in Google’s spidering lately, and along with it a notable worsening of render times. Yesterday, for example, Google spidered our site at a rate of 30:1 (google spider vs. organic traffic.) So in other words, for every organic page request, Google hits the site 30 times. Our render times have lengthened to an avg. of 2 seconds (and up to 2.5 seconds). Before this renewed interest Google has taken in us we were seeing closer to one second average render times, and often half of that. A year ago, the ratio of Spider to Organic was between 6:1 and 10:1. Is requesting a crawl-delay from Googlebot a viable option? Our goal would be only to reduce Googlebot traffic, and hopefully improve render times and organic traffic. Thanks, Trisha

Technical SEO | | lzhao0