If Google's index contains multiple URLs for my homepage, does that mean the canonical tag is not working?

-

I have a site that uses canonical tags on all pages; however, not all duplicate versions of the homepage are 301'd, due to a limitation in the hosting platform. So some site visitors get www.example.com/default.aspx while others just get www.example.com. I can see the correct canonical tag in the source code of both versions of the homepage, but when I search Google for the specific URL "www.example.com/default.aspx", I see that they've indexed that URL as well as the "clean" one. Is this a concern? Shouldn't Google only show me the clean URL?
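The manual check described above (viewing the source of both homepage versions) can be repeated programmatically by parsing each page's HTML and comparing the declared canonicals. This is a minimal sketch using only Python's standard-library `html.parser`; the inline HTML strings and the `http://www.example.com/` canonical are hypothetical stand-ins for the real fetched page source, and `find_canonical` is an illustrative helper name, not part of any SEO tool.

```python
from html.parser import HTMLParser
from typing import List, Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated tokens, so split before matching.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        href = attr_map.get("href")
        if "canonical" in rel_tokens and href:
            self.canonicals.append(href.strip())


def find_canonical(html: str) -> Optional[str]:
    """Return the page's canonical URL, or None if it is missing or ambiguous."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    # Two or more canonical tags send conflicting signals, so treat that as unusable.
    return finder.canonicals[0] if len(finder.canonicals) == 1 else None


if __name__ == "__main__":
    # Hypothetical page sources for the two homepage variants from the question.
    variants = {
        "http://www.example.com/": '<head><link rel="canonical" href="http://www.example.com/"></head>',
        "http://www.example.com/default.aspx": '<head><link rel="canonical" href="http://www.example.com/" /></head>',
    }
    for url, html in variants.items():
        print(url, "->", find_canonical(html))
```

Matching results only confirm that the tag is present and consistent on both variants; they say nothing about whether Google will choose to honor it.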

-

In most cases, Google does seem to "de-index" the non-canonical URL if it processes the tag. I put "de-index" in quotes because, technically, the page is still in Google's index; once it stops showing up at all (including in "site:" searches), though, I essentially consider it de-indexed. If we can't see it, it might as well not be there.

If 301-ing isn't an option, I'd double-check a few things:

(1) Is the non-canonical page ranking for anything (including very long-tail terms)?

(2) Are there any internal links to the non-canonical URL? These can send a strongly mixed signal.

(3) Are there any other mixed signals that might be throwing off the canonical? Examples include canonicals on other pages that contradict this one, 301s/302s that override the canonical, etc.
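Point (2) can be checked mechanically. This sketch, again using only the Python standard library, lists the links on a single page that resolve to the non-canonical homepage path on the same host. The page URL, the sample markup, and the `/default.aspx` path are assumptions taken from the question, `links_to_noncanonical` is a hypothetical helper, and crawling the whole site to collect pages is left out.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def links_to_noncanonical(page_url: str, html: str, noncanonical_path: str) -> List[str]:
    """Return absolute internal links on the page that point at noncanonical_path."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlsplit(page_url).netloc.lower()
    target = noncanonical_path.lower()
    hits = []
    for href in collector.hrefs:
        # Resolve relative links such as "Default.aspx" against the page URL.
        absolute = urljoin(page_url, href)
        parts = urlsplit(absolute)
        # Same host only; paths are compared case-insensitively (an assumption
        # about the server) so /Default.aspx is caught too. Query strings are ignored.
        if parts.netloc.lower() == host and parts.path.lower() == target:
            hits.append(absolute)
    return hits


if __name__ == "__main__":
    # Hypothetical markup for one internal page of the site.
    sample = (
        '<a href="/">Home</a> <a href="/default.aspx">Home</a> '
        '<a href="Default.aspx?ref=nav">Home</a> '
        '<a href="http://other.example.org/default.aspx">Elsewhere</a>'
    )
    for link in links_to_noncanonical("http://www.example.com/about", sample, "/default.aspx"):
        print(link)
```

Any URL this reports is an internal link worth repointing at the clean homepage URL.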

-

As Digital-Diameter said, the best fix for this problem is a 301. A canonical tag can eventually lead to the incorrect URL being replaced by the correct one in the SERPs, but it is also important to note that rel=canonical is a suggestion, not a directive: search engines take it into consideration but may choose not to follow it.

-

Technically, rel=canonical tags can still leave a page indexed; they simply tell Google to pass the page's authority to the canonical URL. From your question I can tell you know this, but I do have to say that 301s are the best way to address it. Blocking a page with robots.txt can help as well, but that only stops Google from crawling the page; the page can still be indexed.
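For readers whose platform does allow server-side redirects, this is roughly what a 301 looks like at the HTTP level. It is a minimal sketch assuming a Python WSGI application, which the OP's site (an ASP.NET `default.aspx` page on a restricted host) is not; `CANONICAL_HOME`, `DUPLICATE_HOME_PATHS`, `redirect_duplicates`, and the stand-in `homepage` handler are hypothetical names.

```python
from wsgiref.simple_server import make_server

# Hypothetical settings: the URL chosen as canonical and the duplicate paths to fold into it.
CANONICAL_HOME = "http://www.example.com/"
DUPLICATE_HOME_PATHS = {"/default.aspx"}


def redirect_duplicates(app):
    """WSGI middleware that answers duplicate homepage paths with a 301."""

    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "/") or "/"
        if path.lower() in DUPLICATE_HOME_PATHS:
            # 301 marks the move as permanent (a 302 would signal a temporary one).
            start_response("301 Moved Permanently", [("Location", CANONICAL_HOME)])
            return [b""]
        return app(environ, start_response)

    return middleware


def homepage(environ, start_response):
    """Stand-in for the real homepage handler."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<h1>Home</h1>"]


application = redirect_duplicates(homepage)

if __name__ == "__main__":
    # Serve locally; http://localhost:8000/default.aspx should redirect to CANONICAL_HOME.
    with make_server("", 8000, application) as server:
        server.serve_forever()
```

Unlike a canonical tag, the redirect means crawlers and visitors never receive the duplicate page at all, which is why it is the stronger fix.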

If you have pages, or versions of pages, that you do not want indexed, you may want to use the noindex meta tag; Google has published notes on it. Be careful, though: this will stop these pages from being indexed, but they will still be crawled (though your rel=canonical solution should make this a non-issue).
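To confirm whether a page actually carries a noindex directive, you can read its robots meta tags. This is a minimal sketch using Python's `html.parser`; it checks only `<meta name="robots">` and `<meta name="googlebot">`, not the equivalent `X-Robots-Tag` HTTP header, and `is_noindexed` is an illustrative helper name.

```python
from html.parser import HTMLParser
from typing import Set


class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").strip().lower()
        if name in ("robots", "googlebot"):
            content = attr_map.get("content") or ""
            # content is a comma-separated list such as "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindexed(html: str) -> bool:
    """True if the page's meta tags ask search engines not to index it."""
    reader = RobotsMetaReader()
    reader.feed(html)
    reader.close()
    # "none" is shorthand for "noindex, nofollow".
    return bool({"noindex", "none"} & reader.directives)


if __name__ == "__main__":
    print(is_noindexed('<meta name="robots" content="noindex, follow">'))
    print(is_noindexed('<meta name="robots" content="index, follow">'))
```

Because the directive lives in the page itself, Google has to be able to crawl the page to see it.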

A few other notes:

In all cases, make sure your internal links point consistently to the URL version you have chosen for your homepage.

WMT (Google Webmaster Tools) also reports inbound links that point to missing or broken URLs. You can use that list to identify any additional 301s you need.

Related Questions

-

Duplicate content homepage - Google canonical 'N/A'?

Hi, I redesigned a client's website and launched it two weeks ago. Since then, I have 301-redirected all old URLs in Google's search results to their counterparts on the new site. However, none of the new pages are appearing in the search results, and even the homepage has disappeared. Only old site links are appearing (even though the old website has been taken down), and in GSC it states: "Page is not indexed: Duplicate, Google chose different canonical than user". However, when I try to work out how to fix the issue and see which URL it claims this is a duplicate of, it says: "Google-selected canonical: N/A". It says the last crawl was only yesterday, so how can I possibly fix it without knowing which page it thinks this duplicates? Is this something that just takes time, or is it permanent? I would understand if it was just Google taking time to crawl and index the pages, but it seems adamant that it's not going to show any of them at all.

Technical SEO | goliath91

-

Canonical homepage link uses a trailing slash while the default homepage uses no trailing slash: will this be an issue?

Hello. First off, let me explain that my client in this case uses BigCommerce, and unlike most other situations, I don't have access to the backend, so I have to rely on BigCommerce to handle certain issues. I'm curious whether it makes much difference that we use domain.com/ as the canonical URL while BigCommerce currently redirects our domain to domain.com. I've been using domain.com/ consistently for the last 6 months, and since we switched stores on Friday, this issue has popped up and has me a bit worried that we'll lose out somehow, via link juice or overall indexing, since this could confuse crawlers. Some say the domain URL is fine with or without the /, as per https://moz.com/community/q/trailing-slash-and-rel-canonical, but I also wanted to see what you all think about this. What say you?

Technical SEO | Deacyde

-

Image Indexing Issue by Google

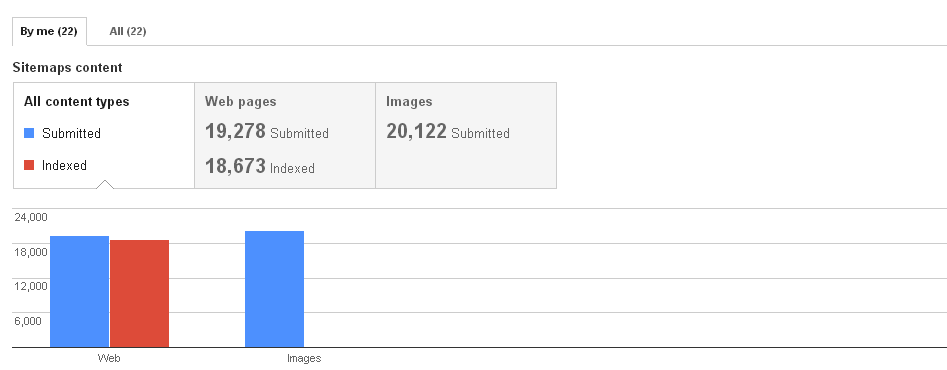

Hello all, my URL is www.thesalebox.com. I submitted my image sitemap in Google Webmaster Tools on 10 Oct 2013, but Google still has not indexed any of my images. Please refer to my sitemaps, www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xml; my Webmaster Tools status and image indexing status were attached as screenshots.

Can you please help me understand why my images are not being indexed by Google yet? Is there an issue? Any suggestions? Thanks!

Technical SEO | CommercePundit

-

Why are my URLs with a trailing slash still getting indexed even though they are redirected in the .htaccess file?

My .htaccess file is set up to redirect a URL with a trailing / to the URL without the /. However, my SEOmoz crawl diagnostics report is showing both URLs. I looked at my Google Webmaster Tools account and saw some duplicate meta title issues; same thing, Google Webmaster Tools is showing the URL with the trailing /. My website was live for about 3 days before I added the code to the .htaccess file to remove the trailing /. Is it possible that both versions were indexed in those 3 days and haven't been removed, even though the .htaccess file has been updated?

Technical SEO | mkhGT

-

Multiple H1 tags on same page

Hi Mozers, I have a question about H1 tags. I know H1 tags don't give any special SEO value, but is there any issue if we use multiple H1 tags on the same page? For example, on the seomoz.org blog home page (seomoz.org/blog) I saw 16 H1 tags. Is it OK to use them like that? Can I completely stop worrying about H1 tags?

Technical SEO | riyas_heych

-

Product landing page URLs for e-commerce sites: best practices?

Hi all, I have built many e-commerce websites over the years, and with each one I learn something new and apply it to the next site, and so on. Let's call it continuous review and improvement!

I have always structured the URLs of my product landing pages like this:

mydomain.com/top-category => mydomain.com/top-category/sub-category => mydomain.com/top-category/sub-category/product-name

This has always worked fine for me, but I see more and more of the following:

mydomain.com/top-category => mydomain.com/top-category/sub-category => mydomain.com/product-name

I have read that many believe the longer the URL, the less SEO impact it may have, and other comments saying it is better to have just the product URL on the final page and leave out the categories for one reason or another. I could probably spend days looking around the internet for people's opinions, so I thought I would ask on SEOmoz, see what other people tend to use, and maybe establish the reasons for your choices.

One of the main reasons I include the categories in the final product URL is to detect when a product name exists in multiple categories on the site, so I can show the correct product to the user. I have built sites that have the same product name (created by the author) in multiple areas of the site, but they are actually different products, not duplicate content. I therefore can't see a way to avoid having the categories in the URL to help detect which product to show the user. Any thoughts?

Technical SEO | yousayjump

-

Canonical Tag Here?

Hello, I have taken on a new client (different from my other client in another question). My client has an e-commerce website, and on nearly all of his products (around 30-40) he has a little information checklist like:

Made in the UK

Prices from 9.99

Top quality

Free delivery on orders over..

This is the duplicate content. What is the best practice for this, given that the SEOmoz crawler is giving me multiple errors?

Technical SEO | Prestige-SEO

-

What's the SEO impact of url suffixes?

Is there an advantage or disadvantage to adding an .html suffix to URLs in a CMS like WordPress? Plugins exist to do it, but it seems better for the user to leave it off. What do search engines prefer?

Technical SEO | Cornucopia