Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

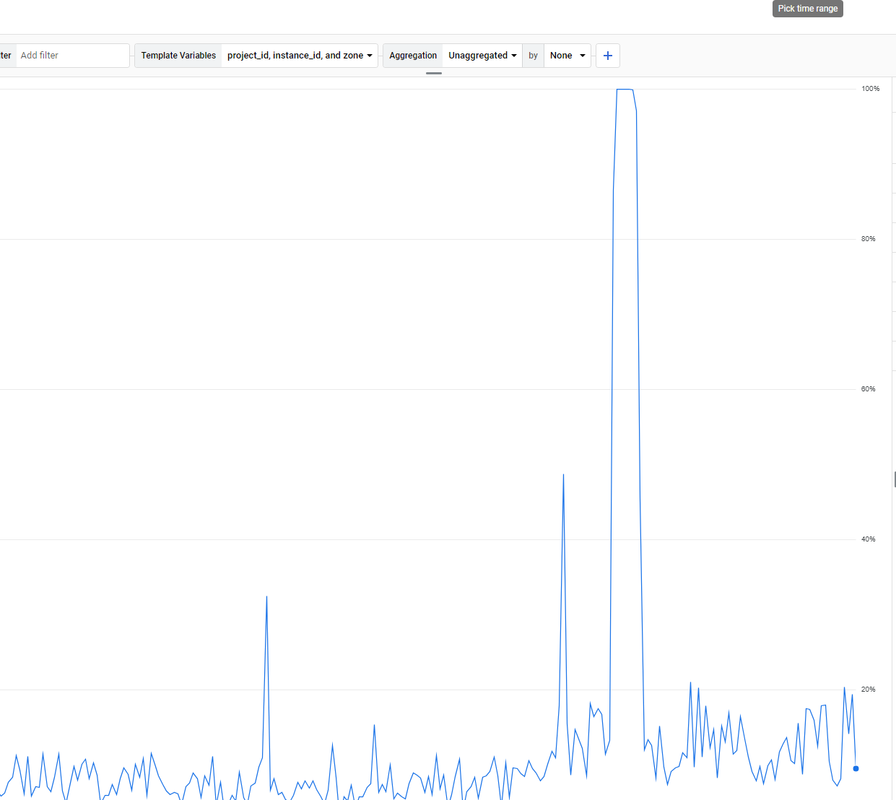

Unsolved The Moz.com bot is overloading my server

-

How to solve it?

-


Maybe a crawl delay will help.
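A minimal sketch of what a crawl delay could look like, assuming Moz's crawlers identify themselves as rogerbot and dotbot (the user-agent names mentioned elsewhere in this thread) and that a 10-second delay suits your site. The robots.txt content is embedded as a string so that Python's standard `urllib.robotparser` can confirm how a compliant crawler would read it. The delay value and the `crawl_delays` helper are illustrative, not something Moz prescribes.

```python
from typing import Dict, List, Optional
from urllib.robotparser import RobotFileParser

# Example robots.txt content. The 10-second delay is an assumed value;
# tune it to what your server can handle.
ROBOTS_TXT = """\
User-agent: rogerbot
Crawl-delay: 10

User-agent: dotbot
Crawl-delay: 10

User-agent: *
Disallow:
"""


def crawl_delays(robots_txt: str, agents: List[str]) -> Dict[str, Optional[int]]:
    """Return the crawl delay (in seconds) each user agent would see, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.crawl_delay(agent) for agent in agents}


# Hypothetical check: the two Moz bots get a delay, other crawlers do not.
delays = crawl_delays(ROBOTS_TXT, ["rogerbot", "dotbot", "Googlebot"])
print(delays)
```

Only the text inside `ROBOTS_TXT` belongs in the robots.txt file at the root of your site; the rest is just a way to sanity-check it before deploying.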

-

@paulavervo

Hi,

We do! The best way to chat with us is via our contact form or direct email. We also have chat within Moz Pro.

Please contact us via help@moz.com or https://moz.com/help/contact

We will be happy to help.

Cheers,

Kerry. -

very nice brother i like it very good keep it up !

-

very nice !

-

Does the Moz team even monitor this forum?

-

If the Moz.com bot is overloading your server, there are several steps you can take to manage and mitigate the issue effectively. First, you can adjust the crawl rate in your `robots.txt` file by specifying a crawl delay for the Moz bot using directives like `User-agent: rogerbot` and `User-agent: dotbot`, followed by `Crawl-delay: 10` to make the bot wait 10 seconds between requests. If this does not suffice, you can temporarily block the bot by disallowing it in your `robots.txt` file. Additionally, it's a good idea to contact Moz’s support team to explain the issue, as they may offer solutions to adjust the crawl rate for your site.

Implementing server-side rate limiting is another effective strategy. For Apache servers, you can add rules in your `.htaccess` file to return a 429 Too Many Requests status code to the Moz bots, while for Nginx servers, you can set up rate limiting in your configuration file to control the number of requests per second from a single user or IP address.

Monitoring your server’s performance and log files can help identify specific patterns or peak times, allowing you to fine-tune your settings. Furthermore, using a Content Delivery Network (CDN) can help distribute the load by caching content and serving it from multiple locations, reducing the direct impact on your server caused by crawlers. By taking these steps, you can manage the load from the Moz.com bot and maintain your server’s stability and responsiveness.
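As a rough illustration of the log-monitoring step, the sketch below counts requests per Moz bot per minute. It assumes access logs in the common "combined" format, where the user agent is the last quoted field. The sample lines, IP addresses, and simplified user-agent strings are made up for the example, and `bot_hits_per_minute` is a hypothetical helper rather than part of any Moz or web-server tooling.

```python
import re
from collections import Counter

# Made-up log lines in "combined" format; the user-agent strings are simplified.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jun/2024:12:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "rogerbot/1.0"',
    '203.0.113.5 - - [10/Jun/2024:12:00:02 +0000] "GET /a HTTP/1.1" 200 512 "-" "rogerbot/1.0"',
    '203.0.113.9 - - [10/Jun/2024:12:00:30 +0000] "GET /b HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; DotBot/1.2)"',
    '203.0.113.5 - - [10/Jun/2024:12:01:05 +0000] "GET /c HTTP/1.1" 200 512 "-" "rogerbot/1.0"',
    '198.51.100.7 - - [10/Jun/2024:12:01:10 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

BOT_PATTERN = re.compile(r"rogerbot|dotbot", re.IGNORECASE)
# Captures the timestamp down to the minute, e.g. "10/Jun/2024:12:00".
MINUTE_PATTERN = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2})")


def bot_hits_per_minute(lines):
    """Count requests per (bot name, minute) for the Moz crawlers."""
    hits = Counter()
    for line in lines:
        parts = line.rsplit('"', 2)  # the user agent is the last quoted field
        if len(parts) < 3:
            continue  # skip lines that are not in the expected format
        bot = BOT_PATTERN.search(parts[-2])
        minute = MINUTE_PATTERN.search(line)
        if bot and minute:
            hits[(bot.group(0).lower(), minute.group(1))] += 1
    return hits


hits = bot_hits_per_minute(SAMPLE_LOG)
for (bot, minute), count in sorted(hits.items()):
    print(f"{minute}  {bot:<9} {count}")
```

Running something like this over a real log file (reading it line by line instead of using `SAMPLE_LOG`) shows which minutes see the heaviest bot traffic, which can help in choosing a crawl delay or a rate limit.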
