What's the best way to integrate off-site inventory?

-

I can't seem to make any progress in rankings or traffic with my car dealership client. I feel like I've ruled out most of the common problems. The only other thing I can see is that all their inventory is on a subdomain running dedicated auto dealership software. Any suggestions for a better way to handle this situation? Am I missing something obvious? The URL is rcautomotive.com

Thanks for your help!

-

The images on the page aren't on a subdomain of rcautomotive.com at all; they're served from a completely different domain, images.dealerfire.com. Even the image used to populate social media thumbnails comes from dealerfire.com. I would start by looking at moving those over to rcautomotive.com if possible.
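If it helps to see how many image hosts are involved, here is a rough sketch that lists every image host on a page that isn't the site's own domain. The sample markup and the function names are made up for illustration (they are not copied from the real site), and it only looks at `<img src>` and the `og:image` meta tag.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ImageHostCollector(HTMLParser):
    """Collects the hostname of every <img src> and og:image URL on a page."""

    def __init__(self):
        super().__init__()
        self.hosts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        url = None
        if tag == "img":
            url = attrs.get("src")
        elif tag == "meta" and attrs.get("property") == "og:image":
            url = attrs.get("content")
        if url:
            host = urlparse(url).hostname
            if host:  # relative URLs have no hostname, so they are same-site
                self.hosts.append(host)


def off_domain_image_hosts(html, site_domain):
    """Return the sorted image hosts that are not site_domain or a subdomain of it."""
    collector = ImageHostCollector()
    collector.feed(html)
    return sorted(
        h for h in set(collector.hosts)
        if h != site_domain and not h.endswith("." + site_domain)
    )


# Hypothetical markup for illustration only.
sample = """
<meta property="og:image" content="https://images.dealerfire.com/thumb.jpg">
<img src="https://images.dealerfire.com/car1.jpg">
<img src="/img/logo.png">
<img src="https://www.rcautomotive.com/img/banner.jpg">
"""
print(off_domain_image_hosts(sample, "rcautomotive.com"))
```

To run it against a live page you would fetch the HTML yourself first and pass it in as a string.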

-

Hi Todd! Are you able to let Andy know your search terms? Did you get this worked out?

-

Hi Todd,

Just to check, is the site set up in terms of Google listing / local? Have you used any structured data on the site? Are you pulling in reviews from the likes of Yelp?
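For anyone unsure what structured data would look like here, this is a minimal sketch that builds a schema.org `AutoDealer` JSON-LD snippet. The helper name and every value passed to it are placeholders (assumptions, not the client's real details), and a real listing would likely want more properties than these.

```python
import json


def auto_dealer_jsonld(name, url, telephone, street, city, region, postal_code):
    """Build a schema.org AutoDealer JSON-LD script tag from plain values."""
    data = {
        "@context": "https://schema.org",
        "@type": "AutoDealer",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values only; swap in the dealership's real details.
snippet = auto_dealer_jsonld(
    name="Example Motors",
    url="https://www.example.com/",
    telephone="+1-555-0100",
    street="1 Example Street",
    city="Exampleville",
    region="EX",
    postal_code="00000",
)
print(snippet)
```

The output goes in the page's HTML, and it is worth running it through a structured data testing tool before relying on it.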

I doubt that something running on a subdomain is going to be the cause of your issues, but without delving into it, I can't be sure.

What sort of search terms are you trying to improve the rankings for?

-Andy


Related Questions

-

How to get into Google's Top Stories?

Hi All, I have been doing research for a few weeks and I cannot for the life of me figure out why I cannot get my website (Racenet) into the top stories in Google. We are in Google News, have "news article" schema, have AMP pages. Our news articles also perform quite well organically and we typically dominate the Google News section. We have two main competitors (Punters and Just Horse Racing) who are both in top stories and I cannot find anything that we are doing that they aren't. Apparently the AMP "news article" schema is incorrect and that could be the reason why we aren't showing up in Google Top Stories, but I can't find anything wrong with the schema and it looks the same as our competitors. For example: https://search.google.com/structured-data/testing-tool/u/0/#url=https%3A%2F%2Fwww.racenet.com.au%2Fnews%2Fblake-shinn-booked-to-ride-doncaster-handicap-favourite-alizee-20190331%3FisAmp%3D1 Does anyone have any ideas of why I cannot get my site into Google Top Stories? Any and all help would be greatly appreciated. Thanks! 🙂

Technical SEO | | Saba.Elahi.M.0 -

Moving WordPress to its own server

Our company wants to remove WordPress from our current Windows server at provider 1 and move it to a new server at provider 2. GoDaddy handles our DNS. I would like to have it on the same domain without masking. I would like to make a DNS entry on GoDaddy so that our current server and our new server can use the same URL (i.e. sellstuff.com), but I only want the DNS to direct traffic to our current server. The goal is to have the new server use the same URL as the old server so nothing needs to be masked once traffic is redirected with a 301 rule in the .htaccess file, and no traffic outside of the 301 rule ends up going to the new server. I would then like to edit the .htaccess file on our current server to redirect to the new server's IP address when someone goes to sellstuff.com/blog. Does this make sense, and is it possible?

Technical SEO | | larsonElectronics0 -

What is the best way to handle Product URLs which prepopulate options?

We are currently building a new site which has the ability to pre-populate product options based on parameters in the URL. We have done this so that we can send individual product URLs to Google Shopping. I don't want to create lots of duplicate pages, so I was wondering what you thought was the best way to handle this? My current thoughts are:

1. Sessions and Parameters

On-site product page filters populate using sessions, so no parameters are required on-site, but options can still be pre-populated via parameters (product?colour=blue&size=100cm) if the user reaches the site via Google Shopping. We could also add "noindex, follow" to the pages with parameters and a canonical tag pointing to the page without parameters.

2. Text-based Parameters

Make the parameters into text-based URLs (product/blue/100cm/) and still use the "noindex, follow" meta tag and add a canonical tag pointing to the page without parameters. I believe this is possibly the best solution, as it still allows users to link to and share pre-populated pages, but they won't get indexed and the link juice would still pass to the main product page.

3. Standard Parameters

After thinking more today, I am considering that the best way may be the simplest: using standard parameters (product?colour=blue&size=100cm) so that I can tell Google what they do in Webmaster Tools, and also adding "noindex, follow" to the pages with parameters along with the canonical tag pointing to the page without parameters.

What do you think the best way to handle this would be?

Technical SEO | | moturner0 -
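To make the parameter-based options concrete, here is a small sketch of the tag logic as the question describes it: every variant gets a canonical link to the parameter-free URL, and only URLs carrying parameters get "noindex, follow". The function names and the example.com URL are assumptions for illustration, not part of any real platform.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Drop the query string and fragment so every variant maps to one URL."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def head_tags(url):
    """Return the <head> tags for a product URL.

    Every page gets a canonical link; only parameterised variants also
    get the "noindex, follow" robots tag.
    """
    tags = ['<link rel="canonical" href="%s">' % canonical_url(url)]
    if urlsplit(url).query:
        tags.append('<meta name="robots" content="noindex, follow">')
    return tags


# Hypothetical product URL, matching the parameter style in the question.
for tag in head_tags("https://www.example.com/product?colour=blue&size=100cm"):
    print(tag)
```

The text-based variant (product/blue/100cm/) would need a different rule for finding the base product path, since there is no query string to strip.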

Google has deindexed 40% of my site because it's having problems crawling it

Hi,

Last week I got my fifth email saying 'Google can't access your site'. The first one I got in early November. Since then my site has gone from almost 80k pages indexed to fewer than 45k, and the number keeps dropping even though we post about 100 new articles daily (it's an online newspaper). The site I'm talking about is http://www.gazetaexpress.com/

We have to deal with DDoS attacks most of the time, so our server guy has implemented a firewall to protect the site from these attacks. We suspect that the firewall is blocking Googlebot from crawling and indexing our site. But then things get more interesting: some parts of the site are being crawled regularly and others not at all. If the firewall were stopping Googlebot from crawling the site, why are some parts crawled with no problems while others aren't?

In the screenshot attached to this post you will see how Google Webmasters is reporting these errors. In this link, it says that if the 'Error' status happens again you should contact Google Webmaster support because something is preventing Google from fetching the site. I used the feedback form in Google Webmasters to report this error about two months ago but haven't heard back. Did I use the wrong form to contact them? If so, how can I reach them and tell them about my problem?

If you need more details feel free to ask. I will appreciate any help. Thank you in advance.

Technical SEO | | Bajram.Kurtishaj1 -

Does Title Tag location in a page's source code matter?

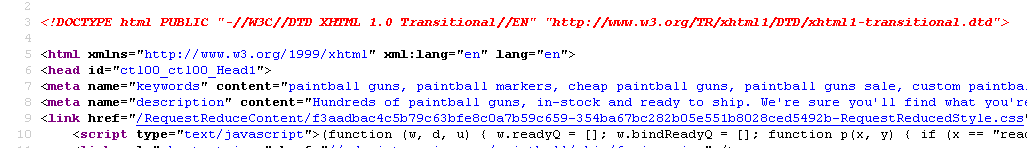

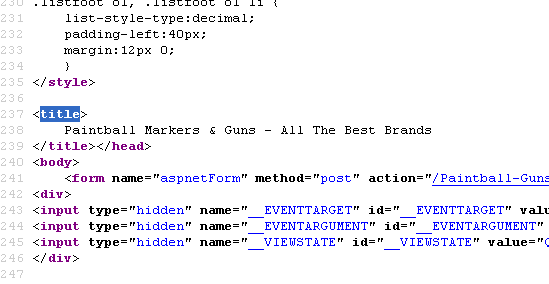

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

The title tag, however, sits below a bunch of code on line 237.

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

Nick

Technical SEO | | Istoresinc0 -
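For checking where these tags sit in a page's source, a quick sketch like the one below reports the line on which the title tag and meta description start. It says nothing about whether position matters for rankings; it only measures it. The class and function names and the sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class HeadTagLocator(HTMLParser):
    """Records the source line on which <title> and the meta description start."""

    def __init__(self):
        super().__init__()
        self.lines = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        line, _column = self.getpos()  # 1-based line of the tag's opening "<"
        if tag == "title":
            self.lines.setdefault("title", line)
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.lines.setdefault("description", line)


def locate_head_tags(html):
    """Return a dict mapping 'title' / 'description' to their first source line."""
    locator = HeadTagLocator()
    locator.feed(html)
    return locator.lines


# Hypothetical page source: description first, title after a script block.
sample = """<html>
<head>
<meta name="description" content="Paintball guns and markers">
<script>var a = 1;</script>
<title>Paintball Guns</title>
</head>
</html>"""
print(locate_head_tags(sample))
```

Feed it the raw page source (for example, saved from "view source") to get the real line numbers.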

Best way to do a site in various regions

I have a client who has 2 primary services in 4 regions. He does mold removal and water damage repair, and he serves Cincinnati, Dayton, Columbus, and Indianapolis. Before hiring my company he had around 30 keyword-based domains and tons of fake Google Places listings. He actually got a lot of traffic that way. However, I will not tolerate that kind of stuff and want to do things the right way.

First of all, what is the best site approach for this? He wants a site for each service and each city: indy mold, cincy mold, dayton mold, dayton water, etc. In the end he will have 8 sites, and he wants to expand into other services and regions. I feel like this is not the right way to handle this, as he also has another site that is more generic. To me the best way to do this is a generic domain with a locations page and a page for each city.

Then for Places he would get one account: an address that is hidden, since he goes to customer locations, and multiple city-defined regions. He does have an office-like address in each city. So should I make him a Places listing for each city or just the one? And of course, how should the actual sites be organized? Thanks

Technical SEO | | webfeatseo0 -

Can <span> tags within links affect Google's perception of them?

Hi, All! This might be really obvious, but I have little coding experience, so when in doubt - ask... One of our client sites has navigation that looks (in part) like this: <a <span="">href</a><a <span="">="http://www.mysite.com/section1"></a> <a <span="">src="images/arrow6.gif" width="13" height="7" alt="Section 1">Section 1</a><a <span=""></a> The W3C validator told us the <span> tags don't validate, and while I ignored most of its comments because I didn't think they would affect what search engines saw, these <span> tags sit right inside the links, so it raised a question. Does anyone know for sure whether this is a problem or not? Thanks in advance! Aviva B

Technical SEO | | debi_zyx0 -

We're working on a site that is a beer company. Because it is required to have an age verification page, how should we best redirect the bots (useragents) to the actual homepage (thus skipping ahead of the age verification without allowing all browsers)?

This question is about useragents and alcohol sites that have an age verification screen upon landing on the site.

Technical SEO | | OveritMedia0