Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Google has deindexed a page it thinks is set to 'noindex', but is in fact still set to 'index'

-

A page on our WordPress powered website has had an error message thrown up in GSC to say it is included in the sitemap but set to 'noindex'. The page has also been removed from Google's search results.

Page is https://www.onlinemortgageadvisor.co.uk/bad-credit-mortgages/how-to-get-a-mortgage-with-bad-credit/

Looking at the page code, plus using Screaming Frog and Ahrefs crawlers, the page is very clearly still set to 'index'. The SEO plugin we use has not been changed to 'noindex' the page.

I have asked for it to be reindexed via GSC but I'm concerned why Google thinks this page was asked to be noindexed.

Can anyone help with this one? Has anyone seen this before, been hit with this recently, got any advice...?

-

@effectdigital and @jasongmcmahon, did you ever get to the bottom of this? If so, what caused it and what was the long-term fix? GSC and Google seem to be behaving in a peculiar way.

We had a similar issue with this page: https://www.simplyadverse.co.uk/bad-credit-mortgage, but after several cache clears and re-indexing/fix requests it indexed fine.

We now have a page on another similar site that is stubbornly refusing to index. It's a new site and, other than a simple domain homepage, all pages had "noindex" on them while under development.

Several pages on the site behaved like this at launch, with GSC saying the page was marked as "noindex" but submitted in the sitemap. Yet when you check whether indexing is possible, GSC says it's fine (we'd removed noindex and set up the sitemap). All crawling tools say it's fine and all other pages are now indexed, but this page won't index despite repeated attempts over a couple of weeks: https://simplysl.co.uk/buy-to-let/

Other than the fact that they're all mortgage-related sites/pages, I can't fathom why one page would be troublesome while all the others index OK despite having the same setup and indexing process. Any ideas?

-

Thanks, I'll take a look

-

Thanks for going into so much detail, much appreciated.

We've asked Google to reindex it and 'validate the fix', even though we can't find anything to fix!

-

Hi there, check that caching isn't the issue at server and CMS levels. Other than that, reindex the page via GSC.

-

This is really weird. Really really weird!

As you say, your site's source code seems to confirm that it is set to index. If we look here, we can plainly see that the coding syntax for a no-index directive is "noindex" (all one word).

Let's look at your source code:

Yep, everything seems fine there! But what if a script is modifying your source code and including the directive - and Google's picking up on that?
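For anyone who wants to repeat the source-code check without eyeballing the markup, here is a minimal sketch in Python. It assumes you already have the page HTML as a string; the class and function names (`RobotsMetaParser`, `has_noindex`) are made up for illustration and are not part of any SEO tool mentioned in this thread.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # One content value can hold several comma-separated directives.
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.append(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """True if any robots meta tag in the HTML asks for the page not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives
```

Running it against both the raw source and the rendered (script-modified) HTML covers the two cases discussed here. It only inspects meta tags, so it says nothing about HTTP headers or robots.txt.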

If we look at the modified source code which I rendered and saved to a file here:

... we can see, there are no problems here either:

Wow - that's really unhelpful!

Let's see what happens if we specifically search Google's live index for the URL:

Interestingly, when we search Google's index for this page, we get this page returned instead.

It makes sense that Google would return that URL if it couldn't return the main URL, as one is nested inside of the other. If everything was healthy, we'd see Google listing both URLs instead of just one of them. Even if you edit my index query to remove the trailing slash, you still only get the nested URL (not the one you want to be showing, which is at a slightly higher-up level)

Another thought I had was, hmm maybe this is a canonical tag gone rogue. That bore no fruit either, as this page (which you want to index, yet won't) canonicals to this page - and both of those URLs are exactly the same. As such, it's obvious that we can't blame the canonical tag either! I even viewed the modified source to see if it got altered, no dice (the canonical tag is just fine)
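The canonical comparison can also be scripted. The sketch below is an illustration only: `CanonicalParser` and `is_self_canonical` are hypothetical names, and the check is a strict string comparison after resolving relative links, which is stricter than whatever normalisation Google applies.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", "").strip())


def is_self_canonical(html: str, page_url: str) -> bool:
    """True if the page declares exactly one canonical and it points at page_url."""
    parser = CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs against the page URL before comparing.
    resolved = [urljoin(page_url, href) for href in parser.canonicals]
    return resolved == [page_url]
```

A page with no canonical tag, or with two conflicting ones, returns `False` here, which is a deliberate choice so that anything unusual gets a manual look.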

Maybe the XML file is telling Google not to index the URL?

Nope - that's fine too! No problems there...

Could the robots.txt file be interfering?

No! Darn it, that's not the problem
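A quick way to reproduce the robots.txt check is Python's standard `urllib.robotparser`. This is a rough sketch: the function name `googlebot_allowed` is invented for the example, and the standard-library parser does not match rules in exactly the same way Google does, so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """True if the given robots.txt text lets the Googlebot user agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)
```

Bear in mind that robots.txt controls crawling rather than indexing, so a block here would normally surface in GSC as a "blocked by robots.txt" report, not as a 'noindex' one.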

I know that a no-index or blocking directive can also be sent through the HTTP header (usually via X-robots). Let's check the response header of your URL out:

Nothing there that really raises my eyebrow. XSS protection is enabled and set to block, but to be honest that shouldn't affect Google's crawling at all. Anyone correct me if I am wrong, but defending your site against cross-site scripting (XSS) attacks doesn't impede crawling, right?
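If you would rather test the response headers programmatically, something along these lines should work. It is a sketch under assumptions: `x_robots_noindex` is a hypothetical helper, the headers are passed in as (name, value) pairs, and the handling of crawler-scoped values such as `googlebot: noindex` is a simplification of how Google reads the header.

```python
# Directives that carry their own "name: value" pair, so a colon
# after them does not mean the rule is scoped to a crawler.
VALUED_DIRECTIVES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


def x_robots_noindex(headers, bot: str = "googlebot") -> bool:
    """True if any X-Robots-Tag header tells `bot` (or every crawler) not to index."""
    for name, value in headers:
        if name.lower() != "x-robots-tag":
            continue
        value = value.lower()
        head, sep, tail = value.partition(":")
        head = head.strip()
        # "googlebot: noindex" applies to one crawler only.
        scoped = bool(sep) and "," not in head and head not in VALUED_DIRECTIVES
        if scoped:
            if head != bot:
                continue  # rule is aimed at a different crawler
            value = tail
        if any(d.strip() in ("noindex", "none") for d in value.split(",")):
            return True
    return False
```

With the standard library, the pairs can come from `urllib.request.urlopen(url).headers.items()`. A security header such as `X-XSS-Protection: 1; mode=block` is simply ignored by this check, since it is not an `X-Robots-Tag` header.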

Fudge it. Let's fling it through Google's PageSpeed Insights tool. Usually that will tell you if something is being blocked and why...

Nothing useful still!

Google's Mobile-Friendly Test gives us some semi-interesting information:

But it doesn't say the page can't be loaded. It only says some resources which the page pulls in can't be loaded! And guess what? They're all external things on other websites (other than a few theme related bits, but nothing IMO that should stop the whole page loading).

Let's try DeepCrawl's indexability checker (they make amazing software by the way... expensive though):

Sir... there is NO GOOD REASON why your URL shouldn't be indexed. I am 99.9% certain you have encountered a legit Google bug. Post about it here. Only Google can help you at this juncture

Related Questions

-

Does Page speed matter for google ranking?

We are not sure that page does matter or not for google ranking as I am working for this keyword "flower delivery in Bangalore" from last few months and I saw some website's google first page who have low page speed but still ranking so I am really worried about my page that has also low page speed. will my Bangalore page rank on google the first page if the speed is low and kindly suggest me more tips for the ranking best factors which really works in 2020 and one more thing that domain authority really matters in this year? as I also saw some websites with low domain authority and ranking on google's first page. Home page: Flowerportal Bangalore page: https://flowerportal.in/flower-delivery/bangalore/ focus Keyword is: Flower delivery in Bangalore, send flowers to Bangalore

Technical SEO | | vidi34231 -

Pages are Indexed but not Cached by Google. Why?

Hello, We have magento 2 extensions website mageants.com since 1 years google every 15 days cached my all pages but suddenly last 15 days my websites pages not cached by google showing me 404 error so go search console check error but din't find any error so I have cached manually fetch and render but still most of pages have same 404 error example page : - https://www.mageants.com/free-gift-for-magento-2.html error :- http://webcache.googleusercontent.com/search?q=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&rlz=1C1CHBD_enIN803IN804&oq=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&aqs=chrome..69i57j69i58.1569j0j4&sourceid=chrome&ie=UTF-8 so have any one solutions for this issues

Technical SEO | | vikrantrathore0 -

Image Indexing Issue by Google

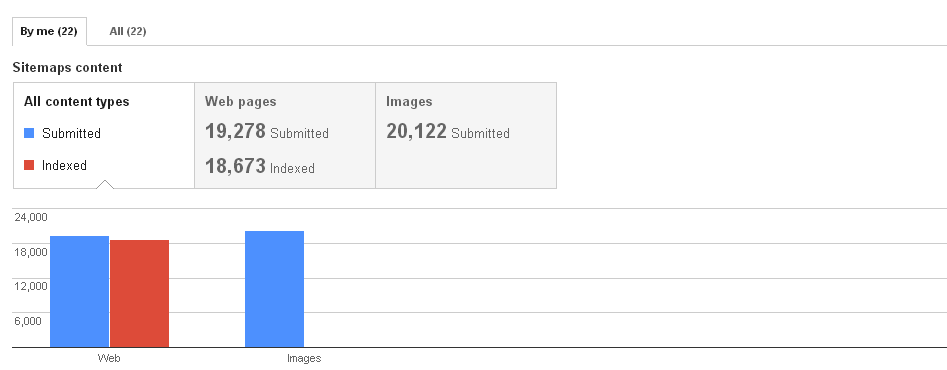

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

How to Remove /feed URLs from Google's Index

Hey everyone, I have an issue with RSS /feed URLs being indexed by Google for some of our Wordpress sites. Have a look at this Google query, and click to show omitted search results. You'll see we have 500+ /feed URLs indexed by Google, for our many category pages/etc. Here is one of the example URLs: http://www.howdesign.com/design-creativity/fonts-typography/letterforms/attachment/gilhelveticatrade/feed/. Based on this content/code of the XML page, it looks like Wordpress is generating these: <generator>http://wordpress.org/?v=3.5.2</generator> Any idea how to get them out of Google's index without 301 redirecting them? We need the Wordpress-generated RSS feeds to work for various uses. My first two thoughts are trying to work with our Development team to see if we can get a "noindex" meta robots tag on the pages, by they are dynamically-generated pages...so I'm not sure if that will be possible. Or, perhaps we can add a "feed" paramater to GWT "URL Parameters" section...but I don't want to limit Google from crawling these again...I figure I need Google to crawl them and see some code that says to get the pages out of their index...and THEN not crawl the pages anymore. I don't think the "Remove URL" feature in GWT will work, since that tool only removes URLs from the search results, not the actual Google index. FWIW, this site is using the Yoast plugin. We set every page type to "noindex" except for the homepage, Posts, Pages and Categories. We have other sites on Yoast that do not have any /feed URLs indexed by Google at all. Side note, the /robots.txt file was previously blocking crawling of the /feed URLs on this site, which is why you'll see that note in the Google SERPs when you click on the query link given in the first paragraph.

Technical SEO | | M_D_Golden_Peak0 -

CDN Being Crawled and Indexed by Google

I'm doing a SEO site audit, and I've discovered that the site uses a Content Delivery Network (CDN) that's being crawled and indexed by Google. There are two sub-domains from the CDN that are being crawled and indexed. A small number of organic search visitors have come through these two sub domains. So the CDN based content is out-ranking the root domain, in a small number of cases. It's a huge duplicate content issue (tens of thousands of URLs being crawled) - what's the best way to prevent the crawling and indexing of a CDN like this? Exclude via robots.txt? Additionally, the use of relative canonical tags (instead of absolute) appear to be contributing to this problem as well. As I understand it, these canonical tags are telling the SEs that each sub domain is the "home" of the content/URL. Thanks! Scott

Technical SEO | | Scott-Thomas0 -

Should i Noindex my privacy policy page?:

Hi, We have a privacy policy page but it can be found at Copyscape and might affect Google Panda content farming. My questions is, should i Noindex my private policy page?:

Technical SEO | | chanel270 -

Adding 'NoIndex Meta' to Prestashop Module & Search pages.

Hi Looking for a fix for the PrestaShop platform Look for the definitive answer on how to best stop the indexing of PrestaShop modules such as "send to a friend", "Best Sellers" and site search pages. We want to be able to add a meta noindex ()to pages ending in: /search?tag=ball&p=15 or /modules/sendtoafriend/sendtoafriend-form.php We already have in the robot text: Disallow: /search.php

Technical SEO | | reallyitsme

Disallow: /modules/ (Google seems to ignore these) But as a further tool we would like to incude the noindex to all these pages too to stop duplicated pages. I assume this needs to be in either the head.tpl or the .php file of each PrestaShop module.? Or is there a general site wide code fix to put in the metadata to apply' Noindex Meta' to certain files. Current meta code here: Please reply with where to add code and what the code should be. Thanks in advance.0 -

How to remove a sub domain from Google Index!

Hello, I have a website having many subdomains having same copy of content i think its harming my SEO for that site since abc and xyz sub domains do have same contents. Thus i require to know i have already deleted required subdomain DNS RECORDS now how to have those pages removed from Google index as well ? The DNS Records no more exists for those subdomains already.

Technical SEO | | anand20100