Correct strategy for long-tail keywords?

-

Hi,

We are selling log houses on our website. Every log house is listed as a "product", and this "product" consists of many separate parts that are technically also products. For example, a log house product consists of doors, windows and a roof, and all of these parts are technically also products with their own content pages.

The question is: should we let Google index these detail pages, or should we set them to noindex?

These pages have no content, only a headline, though those headlines are great for long-tail SEO: we are probably the only manufacturer in the world with a separate page for "log house wood beam 400x400mm". But otherwise these pages are empty.

My question is: what should we do? Should we let Google index them all (we have over 3,600 of them) and maybe try to insert an automatic FAQ section into every one of them to add more content to the page?

Or will 3600 low-content pages hurt our rankings? Otherwise we are ranking quite well.

Thanks, Johan

-

Thank you very much, Philipp. We will change our website according to your suggestions.

Have a great week! Johan

-

Hi Johan!

Ah okay, then the answer is easier: if your visitors can't see those pages, Google shouldn't access them either. I also suggest not listing them in an XML sitemap, since only pages with value for users should be listed there. As for what you asked above: IMO these 3,600 low-quality pages would not have a positive impact; they might even hurt you.

Focus on promoting the pages that are of value to users in search engines.
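A minimal Python sketch of this suggestion, assuming each page record carries a hypothetical `user_visible` flag (not something from Johan's system): only user-visible pages go into the XML sitemap, and the hidden part pages get a `noindex` robots meta tag. If you rely on `noindex`, don't also block those URLs in robots.txt, since Google has to crawl a page to see the tag.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    user_visible: bool  # hypothetical flag: False for quote-system-only parts


def robots_meta(page: Page) -> str:
    """Return the robots meta tag a page should carry."""
    content = "index, follow" if page.user_visible else "noindex"
    return f'<meta name="robots" content="{content}">'


def build_sitemap(pages: list[Page]) -> str:
    """Build an XML sitemap that lists only user-visible pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.user_visible:
            # <url><loc>...</loc></url> per the sitemaps.org protocol
            loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
            loc.text = page.url
    return ET.tostring(urlset, encoding="unicode")
```

The example.com URLs you would pass in are placeholders; in practice the page list would come from your own product database.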

Cheers,

Phil

-

Hi, Philipp!

Thank you for the response. Visitors currently do not see these options anyway; they exist in the system only because of our custom pricing quote system.

I think my main question is: should we hide these pages from Google, or should we list them in our sitemap? Our site visitors will never see these pages, but they do exist. We can make them noindex if Google might view them unfavorably.

-

That amount is probably a bit too much. I guess you'd rather bundle your efforts into top-level overview pages. Keep in mind that users will hardly ever perform searches as specific as "log house wood beam 400x400mm"...

Usually it's a better choice to have one landing page for the product and let users customize it via a dropdown (to choose 400mm, 600mm, etc.). This will reduce the number of pages and will probably lead to a better user experience, too.
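A rough sketch of that consolidation, assuming part names follow a hypothetical "<name> <W>x<H>mm" pattern like the 400x400mm beam above: size variants collapse into one family, which could become a single landing page whose sizes populate the dropdown.

```python
import re

# Hypothetical naming convention: "<part name> <W>x<H>mm", e.g. "log house wood beam 400x400mm"
SIZE_PATTERN = re.compile(r"^(?P<family>.+?)\s+(?P<size>\d+x\d+mm)$")


def group_variants(part_names: list[str]) -> dict[str, list[str]]:
    """Collapse size variants into one family per landing page."""
    families: dict[str, list[str]] = {}
    for raw in part_names:
        name = raw.strip()
        match = SIZE_PATTERN.match(name)
        if match:
            # Sized part: file the size under its family as a dropdown option
            families.setdefault(match["family"], []).append(match["size"])
        else:
            # No size suffix: the part stands alone with no dropdown options
            families.setdefault(name, [])
    return families
```

Each key in the result would map to one landing page instead of one page per size.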

Related Questions

-

Related Keywords: How many separate pages?

We have an attorney website. For one practice area, our research shows many different keyword queries of 2-4 words. The keywords are all very different, but they all relate to the same kind of legal action. We're wondering whether we should write many different pages, perhaps 10, to cover the basic keyword categories, or just a few pages. In the latter case, many of the target keywords would be mentioned in the text but wouldn't get placement in a URL or title tag.

One basic problem is that, since the queries use different words but result in the same kind of legal action and applicable law, the content of the pages might be similar, differing only by a paragraph that speaks to that specific keyword. The rest of the content would be largely the same across pages, i.e. "here is the law that applies, contact us." Also, some of the keywords, like the name of the law, would have to be repeated on all the pages.

Intermediate & Advanced SEO | RFfed90

Secondary related keywords

Hello, do my secondary related keywords also need to appear as the subjects of my sentences, or can they be objects/predicates? Thank you,

Intermediate & Advanced SEO | seoanalytics1

Is this correct?

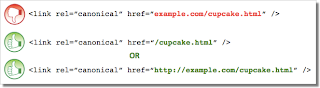

I noticed Moz using the following for its homepage. Is this best practice, though? The reason I ask is that I use and I've been reading this page by Google: http://googlewebmastercentral.blogspot.co.uk/2013/04/5-common-mistakes-with-relcanonical.html (5 common mistakes with rel=canonical), specifically "Mistake 2: Absolute URLs mistakenly written as relative URLs":

The tag, like many HTML tags, accepts both relative and absolute URLs. Relative URLs include a path “relative” to the current page. For example, “images/cupcake.png” means “from the current directory go to the ‘images’ subdirectory, then to cupcake.png.” Absolute URLs specify the full path, including the scheme like http://.

Specifying (a relative URL since there’s no “http://”) implies that the desired canonical URL is http://example.com/example.com/cupcake.html even though that is almost certainly not what was intended. In these cases, our algorithms may ignore the specified rel=canonical. Ultimately this means that whatever you had hoped to accomplish with this rel=canonical will not come to fruition.

Intermediate & Advanced SEO | Bio-RadAbs
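To see the resolution rule Google describes in practice, Python's `urllib.parse.urljoin` applies standard relative-URL resolution. A small sketch; `resolve_canonical` and `is_absolute` are illustrative helper names, and Google's own parser may differ in edge cases.

```python
from urllib.parse import urljoin, urlparse


def resolve_canonical(page_url: str, href: str) -> str:
    """Resolve a rel=canonical href against the page it appears on."""
    return urljoin(page_url, href)


def is_absolute(href: str) -> bool:
    """True only if the href carries its own scheme and host."""
    parsed = urlparse(href)
    return bool(parsed.scheme and parsed.netloc)
```

Usage: `resolve_canonical("http://example.com/", "example.com/cupcake.html")` yields `http://example.com/example.com/cupcake.html`, which is exactly the mistake described above, while the fully qualified `http://example.com/cupcake.html` resolves to itself. Checking every canonical href with `is_absolute` is one cheap way to catch the problem before publishing.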


Is it alright to repeat a keyword in the title tag?

I know at first glance the answer to this is a resounding NO, that it can be construed as keyword stuffing, but please hear me out. I am working on optimizing a client's website, and although MOST of the title tags can be optimized without repeating a keyword, occasionally I run into one that doesn't read right if I don't repeat the keyword. Here's an example:

Current title: Photoshop on the Cloud | Adobe Photoshop Webinars | Company Name

What I am considering using as the optimized title: Adobe Photoshop on the Cloud | Adobe Photoshop Webinars | Company Name

Yes, I know both titles are longer than recommended. In both instances, only the company name gets truncated, so I am not too worried about that. So I guess what I want to know is this: am I right in my original assumption that it is NEVER okay to repeat keywords in a title tag, or is it alright when it makes sense to do so?

Intermediate & Advanced SEO | MIGandCo

Is it a good strategy to create pages that are specific to different keywords to rank higher in SEO?

We have a main website and a local website. Would it be the right strategy to create new pages on the local website specifically to rank for certain non-branded keywords? Is creating new pages to rank for keywords the right approach?

Intermediate & Advanced SEO | FedExLocal

SEO Audit Strategy For A Complex Website?

I am looking for a list of SEO audit tools and strategies for a complex website. The things I am looking for include (but are not limited to): finding all the subdomains of the website; listing all the 301s, 302s, 404s, etc.; finding current canonical tags; suggesting canonical tags for certain links; listing/finding all current rel=nofollow links on the website; listing internal links that do and don't use "www."; and finding duplicate content on additional domains owned by this website. I know how to find some of the items above, but I'm not sure whether my methods are optimal and/or the most accurate. Thank you in advance for your input!

Intermediate & Advanced SEO | CTSupp
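A partial, offline sketch covering three items on that list (current canonical tags, rel=nofollow links, and internal links with and without "www."), assuming you already have each page's HTML. Finding 301/302/404 responses and subdomains needs an actual crawler and is not shown. `AuditParser` is a hypothetical name built on the standard-library `html.parser`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class AuditParser(HTMLParser):
    """Collect canonical, nofollow and www/non-www internal links from one page."""

    def __init__(self, page_url: str, site_host: str):
        super().__init__()
        self.page_url = page_url
        self.site_host = site_host  # bare host without "www.", e.g. "example.com"
        self.canonical = None
        self.nofollow_links = []
        self.internal_www = []
        self.internal_no_www = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        href = attrs.get("href")
        if href is None:
            return
        url = urljoin(self.page_url, href)  # make relative hrefs absolute
        if tag == "link" and "canonical" in rel:
            self.canonical = url
        elif tag == "a":
            if "nofollow" in rel:
                self.nofollow_links.append(url)
            host = urlparse(url).hostname or ""
            if host == "www." + self.site_host:
                self.internal_www.append(url)
            elif host == self.site_host:
                self.internal_no_www.append(url)
```

Feed each crawled page to a fresh parser with `parser.feed(html)` and aggregate the lists across pages; links to other hosts are ignored.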

Strategies in Renaming URLs

We're renaming all of our Product URLs (because we're changing eCommerce Platforms), and I'm trying to determine the best strategy to take. Currently, they are all based on product SKUs. For example, Bacon Dental Floss is: http://www.stupid.com/fun/BFLS.html Right now, I'm thinking of just using the Product name. For example, Bacon Dental Floss would become: http://www.stupid.com/fun/bacon-dental-floss.html Is this strategy the best for SEO? Any better ideas? Thanks!

Intermediate & Advanced SEO | JustinStupid
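A sketch of the name-based scheme, assuming you will 301-redirect each old SKU URL to its new slug URL when switching platforms (the question doesn't say so). `slugify` and `build_redirects` are hypothetical helpers; the `/fun/` prefix comes from the example URL.

```python
import re
import unicodedata


def slugify(name: str) -> str:
    """Turn a product name into a lowercase, hyphen-separated URL slug."""
    # Strip accents (é -> e) so slugs stay plain ASCII
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of non-alphanumerics into a single hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_name.lower())
    return slug.strip("-")


def build_redirects(products: dict[str, str], prefix: str = "/fun/") -> dict[str, str]:
    """Map each old SKU-based path to its new name-based path (for 301s)."""
    return {
        f"{prefix}{sku}.html": f"{prefix}{slugify(name)}.html"
        for sku, name in products.items()
    }
```

Two products with the same name would produce the same slug, so check the output for collisions before going live.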

Am I keyword stuffing my titles?

I run a site where I answer questions. As I answer each question, I choose a title for the page. I have been trying to get good keywords into my titles, but now I am wondering if I have been keyword stuffing them and should be more succinct.

So, let's say I had a question about a sore back. Here is the title tag I would use:

Why is my back sore? I have spinal pain and need relief and help. | My Main Keyword

That's a fictitious example, but the idea is that I would be trying to get the keywords "back", "sore", "spinal", "pain", "relief", "help" and my main website keyword into the title. As I'm writing this, I'm seeing the folly in it. I think it would likely be much better to simply use the title "Why is my back sore?"

So, I have three questions:

1. Is it better to have a succinct title targeting one keyword/keyword phrase than to get lots of keywords into my title?
2. Should I be putting my main keyword after each of my titles? Shortly after doing this on 1700+ pages, I was #1 for my main keyword. But I was also doing other things to boost my presence for this keyword.
3. If I decide to use more succinct titles, how would you suggest I go about running a test to see which is better?

Looking forward to your responses! Thanks!

Intermediate & Advanced SEO | MarieHaynes
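One common way to answer question 3 is a split test: keep the current titles on a control group of pages, switch a variant group to succinct titles, and compare organic traffic (e.g. clicks) for both groups over the same period. A sketch of a deterministic split, assuming hashing the URL is an acceptable way to assign pages; `assign_group`, `split_pages`, and the salt value are hypothetical.

```python
import hashlib


def assign_group(url: str, salt: str = "title-test-1") -> str:
    """Deterministically assign a page to 'control' or 'variant'."""
    # Hashing salt + URL gives a stable, roughly 50/50 assignment
    digest = hashlib.sha256(f"{salt}:{url}".encode("utf-8")).digest()
    return "variant" if digest[0] % 2 else "control"


def split_pages(urls: list[str], salt: str = "title-test-1") -> dict[str, list[str]]:
    """Partition URLs into control and variant groups."""
    groups: dict[str, list[str]] = {"control": [], "variant": []}
    for url in urls:
        groups[assign_group(url, salt)].append(url)
    return groups
```

Because the assignment depends only on the URL and salt, re-running it always yields the same groups; changing the salt starts a fresh test.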