Moz Q&A is closed.

After more than 13 years and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content (many posts will remain viewable), we have locked both new posts and new replies.

Does Google index internal anchors as separate pages?

-

Hi,

Back in September, I added a function that sets an anchor on each subheading (h2 to h6) and creates a table of contents linking to each of those anchors. These anchors did show up in the SERPs as "Jump to" links. Fine.
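The function itself isn't shared in the thread. A minimal Python sketch of the same idea, assuming headings are plain `<h2>` to `<h6>` tags without attributes (a real implementation would more likely use an HTML parser; `slugify` and `add_anchors_and_toc` are hypothetical names):

```python
import re
import unicodedata

# Hypothetical pattern: matches <h2>..</h2> through <h6>..</h6> without attributes.
HEADING_RE = re.compile(r"<h([2-6])>(.*?)</h\1>", re.IGNORECASE | re.DOTALL)


def slugify(text: str) -> str:
    """Turn heading text into a fragment-safe id (hypothetical scheme)."""
    # Strip accents, lowercase, and replace runs of non-alphanumerics with "-".
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def add_anchors_and_toc(html: str) -> str:
    """Give every h2-h6 an id and prepend a table of contents linking to those ids."""
    seen: dict[str, int] = {}
    toc_items: list[str] = []

    def replace(match: re.Match) -> str:
        level, text = match.group(1), match.group(2)
        slug = slugify(text) or "section"
        # De-duplicate repeated headings: the second "Intro" becomes "intro-2".
        seen[slug] = seen.get(slug, 0) + 1
        if seen[slug] > 1:
            slug = f"{slug}-{seen[slug]}"
        toc_items.append(f'<li><a href="#{slug}">{text}</a></li>')
        return f'<h{level} id="{slug}">{text}</h{level}>'

    body = HEADING_RE.sub(replace, html)
    toc = '<nav class="toc"><ul>' + "".join(toc_items) + "</ul></nav>"
    return toc + body
```

Every generated link, such as `#uber-uns`, points into the same URL rather than to a new page.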

Back then I also changed the canonicals to a slightly different structure, and in the meantime the number of indexed pages increased massively, WAY over the top. That has since been fixed by removing a complete section of the site (returning 410). However, there are still ~34,000 pages indexed for what are really more like 4,000-plus pages (all properly canonicalised). Naturally I am wondering what Google thinks it is indexing. The number is just way off and quite inexplicable.
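Returning 410 for a removed section can be as simple as a path-prefix check. A minimal WSGI sketch in Python; `REMOVED_PREFIX` and the response bodies are hypothetical placeholders, and on a real site this would more likely live in the web server or CMS configuration:

```python
# Hypothetical path of the removed section; substitute the real one.
REMOVED_PREFIX = "/old-section/"


def app(environ, start_response):
    """Minimal WSGI app: 410 Gone for the removed section, 200 OK for everything else."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/plain; charset=utf-8")]
    if path.startswith(REMOVED_PREFIX):
        # 410 says the resource was removed permanently, unlike the more generic 404.
        start_response("410 Gone", headers)
        return [b"This section has been removed."]
    start_response("200 OK", headers)
    return [b"Regular page."]
```

For a quick local check, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves it on port 8000.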

So I was wondering:

Does Google save JumpTo links as unique pages?

Also, does anybody know a method of actually getting a list of all the pages in the Google index? (Not the pages that actually exist on the site, via Screaming Frog etc., but the pages actually in the index. Sadly, none of the methods I found work.)

Finally, does anybody have any other explanation for the discrepancy between indexed and actual pages?
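Since Google doesn't show a full list of indexed URLs, the sketch below assumes you already have *some* list of indexed URLs (for instance sample URLs exported from Search Console, or URLs seen in server logs) plus the set of canonical URLs from your sitemap. It groups the extra URLs by the kind of variant they look like. The function names and categories are hypothetical, and a large bucket only hints at a cause rather than proving it:

```python
from collections import Counter
from urllib.parse import urlsplit


def loose_key(url: str) -> str:
    """Collapse scheme, a leading 'www.', query, fragment and trailing slash into one key."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    return host + (parts.path.rstrip("/") or "/")


def explain_extra_urls(indexed: list[str], canonical: set[str]) -> Counter:
    """Count indexed URLs that are not canonical, grouped by the kind of variant they look like."""
    known = {loose_key(u) for u in canonical}
    reasons: Counter = Counter()
    for url in indexed:
        if url in canonical:
            continue  # exactly one of the intended pages
        if loose_key(url) not in known:
            reasons["unknown page"] += 1  # e.g. leftovers of a removed section
        elif urlsplit(url).query:
            reasons["query-string variant"] += 1  # ?page=2, tracking parameters, ...
        else:
            reasons["other variant (scheme, www, slash, fragment)"] += 1
    return reasons
```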

Thanks for your replies!

Nico

-

Thanks. So I have to keep looking for where a tenfold increase in indexed pages (according to Search Console) might come from. Unfortunately, the rest of your reply misses my problem; I have probably been unclear.

The reason I asked for a method to see which pages ARE indexed is this: I seem to have no problem getting stuff indexed (a crystal-clear sitemap with dates, a clear link structure, etc.), but Google seems over-eager and indexes more than there really is. If it is a technical problem, I'd like to fix it, but Google does not show anywhere which pages are actually indexed. There are lots of methods around, but none of the ones I found work as of now.
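For reference, a "sitemap with dates" means one `<lastmod>` entry per URL in the sitemaps.org format. A minimal Python sketch that builds one from a hypothetical mapping of canonical URL to last-modified date; listing only canonical URLs keeps the sitemap consistent with the canonical tags:

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return sitemap XML with one <url> (loc + lastmod) per canonical URL, sorted by URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = modified.isoformat()  # W3C date: YYYY-MM-DD
    # Prepend the declaration by hand so it always states UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```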

I have been well aware of JumpTo links, as I stated, and they work nicely. I have no problem at all with "not enough" indexed pages; it is rather the opposite, and I have no idea what causes it.

Regards

Nico

-

I agree with Russ that the anchors are not going to be indexed separately, but I believe those anchors are kickass page optimization, second only to the title tag.

-

1. The anchor pages aren't going to be indexed separately. If you are lucky, you might get a rich snippet from them in the SERPs, which would be nice. You can see an example of this if you search Google for "broken link building" and look at the top position.

2. Google likely has a crawl budget for sites based on a number of factors - inbound links, content uniqueness, etc. Your best bet is to make sure you have a strong link architecture, a complete and updated sitemap, and a good link profile.

3. Google can't index the whole web, nor would they want to. They just want to index pages that have a strong likelihood of ranking so they can build the best possible search engine.
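Point 1 is consistent with how URL fragments work: the part after `#` is resolved in the browser and is not sent to the server, so `page#step-1` and `page#step-2` are the same resource as `page`. A small Python illustration of that URL mechanic (`indexable_url` is a hypothetical helper, not a description of Google's internals):

```python
from urllib.parse import urldefrag


def indexable_url(url: str) -> str:
    """Drop the #fragment; it never reaches the server, so it doesn't name a separate resource."""
    return urldefrag(url).url


urls = [
    "https://example.com/guide/",
    "https://example.com/guide/#step-1",
    "https://example.com/guide/#step-2",
]
# All three collapse to one URL.
print({indexable_url(u) for u in urls})
```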


Related Questions

-

Keywords are indexed on the home page

Hello everyone. For one of our websites, we have optimized for many keywords. However, it seems that every keyword is indexed on the home page, and thus they don't rank properly. This occurs on only one of our many websites. I am wondering if anyone knows the cause of this issue and how to solve it. Thank you.

Technical SEO | | Ginovdw1 -

Pages are Indexed but not Cached by Google. Why?

Hello, we have a Magento 2 extensions website, mageants.com. For about a year, Google cached all my pages every 15 days, but for the last 15 days my website's pages have not been cached by Google; the cached view shows a 404 error. I checked Search Console but didn't find any errors, so I used Fetch and Render manually, but most pages still show the same 404 error. Example page: https://www.mageants.com/free-gift-for-magento-2.html Error: http://webcache.googleusercontent.com/search?q=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&rlz=1C1CHBD_enIN803IN804&oq=cache%3Ahttps%3A%2F%2Fwww.mageants.com%2Ffree-gift-for-magento-2.html&aqs=chrome..69i57j69i58.1569j0j4&sourceid=chrome&ie=UTF-8 Does anyone have a solution for this issue?

Technical SEO | | vikrantrathore0 -

How to check if an individual page is indexed by Google?

So my understanding is that you can use site: [page URL without http] to check whether a page is indexed by Google, but is this 100% reliable? Recently I've worked on a few pages that did not show up when I checked them with site:, but they do show up when I use info: and also show their cached versions. The rest of the site, including the pages above this one (the URL I was checking was quite deep), is indexed just fine. What does this mean? Thank you. P.S. I do not have Webmaster Tools or Google Analytics access for these sites.

Technical SEO | | linklander0 -

How to stop google from indexing specific sections of a page?

I'm currently trying to find a way to stop Googlebot from indexing specific areas of a page. Long ago, Yahoo Search created the class="robots-nocontent" tag, and I'm trying to find out whether Google has a similar mechanism or has adopted the same tag. Any help would be much appreciated.

Technical SEO | | Iamfaramon0 -

Image Indexing Issue by Google

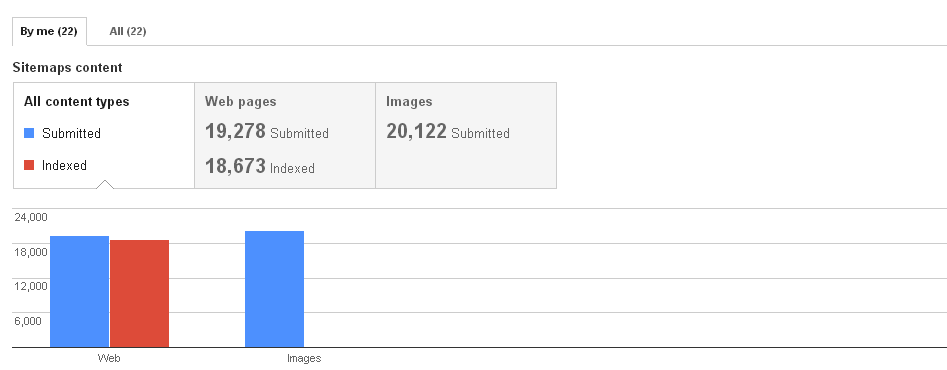

Hello all, my URL is www.thesalebox.com. I submitted my image sitemaps in Google Webmaster Tools on 10th Oct 2013, but Google still has not indexed any of my site's images. Please see my sitemaps, www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xml; my Webmaster Tools status and image indexing status are shown in the screenshot. Can you please help me understand why my images are not being indexed by Google yet? Is there an issue? Please give me suggestions. Thanks!

Technical SEO | | CommercePundit0 -

How do I remove all sandbox test site links indexed by Google?

While developing the site, I used a test domain, sandbox.abc.com, whose content is the same as abc.com. Now, when I search site:sandbox.abc.com, I can see that its content duplicates the main site, abc.com. My question is: how do I remove all these links from Google? P.S. I have just added a robots.txt to the sandbox that disallows all pages. Thanks,

Technical SEO | | JohnHuynh0 -

Blank pages in Google's webcache

Hello all, is anybody seeing blank pages in Google's 'Cached' view? For a couple of my pages I see just the page background and none of the content, but when I click 'View Text Only' all of the content is there. Strange! I'd love to hear if anyone else is experiencing the same. Perhaps this has something to do with the rollout of Google's updates last week? Thanks,

Technical SEO | | A_Q

Elias0 -

Why does Google index my IP URL?

Hi guys, a question please. If you search site:112.65.247.14, you can see that Google indexes our website's IP address, which could duplicate our darwinmarketing.com content pages. I am not quite sure why Google indexes my IP pages as well as the domain pages. I understand this could be because of backlinks, internal links, etc., but I don't see obvious issues there. I have also submitted a request to Google to remove the IP address from the index, but with no luck. Do you have any other suggestions? I tried the Change of Address setting in Google Webmaster Tools, but it wasn't allowed; it said "Restricted to root level domains only". Any ideas? Thank you! boson

Technical SEO | | DarwinChinaSEO0