Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Unsolved Capturing Source Dynamically for UTM Parameters

-

Does anyone have a tutorial on how to dynamically capture the referring source to be populated in UTM parameters for Google Analytics?

We want to syndicate content and be able to see all of the websites that provided referral traffic for this specific objective. We want to set a specific utm_medium and utm_campaign but have the utm_source be dynamic and capture the referring website.

If we set a permanent utm_source, it would appear the same for all incoming traffic.

Thanks in advance!

-

@peteboyd said in Capturing Source Dynamically for UTM Parameters:

Thanks in advance!

UTM (Urchin Tracking Module) parameters are tags that you can add to the end of a URL in order to track the effectiveness of your marketing campaigns. These parameters are used by Google Analytics to help you understand how users are interacting with your website and where they are coming from.

There are five different UTM parameters that you can use:

utm_source: This parameter specifies the source of the traffic, such as "google" or "facebook".

utm_medium: This parameter specifies the medium of the traffic, such as "cpc" (cost-per-click) or "social".

utm_campaign: This parameter specifies the name of the campaign, such as "spring_sale" or "promotion".

utm_term: This parameter specifies the term or keywords used in the campaign, such as "shoes" or "dress".

utm_content: This parameter specifies the content of the ad, such as the headline or the call-to-action.
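As a rough illustration of how these parameters end up on a URL, here is a minimal sketch. The helper name buildTaggedUrl and the example values are assumptions made for this example, not part of Google Analytics.

```javascript
// Hypothetical helper: append UTM parameters to a landing-page URL.
function buildTaggedUrl(baseUrl, utm) {
  var url = new URL(baseUrl);
  // Only the five standard UTM keys are copied; anything else is ignored.
  var keys = ['utm_source', 'utm_medium', 'utm_campaign', 'utm_term', 'utm_content'];
  keys.forEach(function (key) {
    if (utm[key]) {
      // searchParams.set() URL-encodes the value.
      url.searchParams.set(key, utm[key]);
    }
  });
  return url.toString();
}

// Example values taken from the parameter descriptions above.
var tagged = buildTaggedUrl('http://www.example.com/', {
  utm_source: 'google',
  utm_medium: 'cpc',
  utm_campaign: 'spring_sale'
});
console.log(tagged);
```

Parameters that are not supplied (here utm_term and utm_content) are simply left off the URL.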

To capture the source dynamically for UTM parameters, you can use JavaScript to read the document.referrer property. This property returns the URL of the page that linked to the current page, or an empty string if there is no referrer. You can then use this value to set the utm_source parameter dynamically.

For example, you might use the following code to set the utm_source parameter based on the referring URL:


// Derive utm_source from the referring URL
var utmSource = '';
if (document.referrer.indexOf('google') !== -1) {
  utmSource = 'google';
} else if (document.referrer.indexOf('facebook') !== -1) {
  utmSource = 'facebook';
}

// Add the utm_source parameter to the URL
var url = 'http://www.example.com?utm_source=' + utmSource;

This code will set the utm_source parameter to "google" if the referring URL contains "google", or to "facebook" if it contains "facebook". If the user came to the page from any other source, the utm_source parameter will be left empty.

You can then use this modified URL to track the effectiveness of your campaigns and understand where your traffic is coming from.
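Since the original question asks to capture every referring website rather than a fixed list, one possible variation is to use the referrer's hostname as the source. This is only a sketch: the function names sourceFromReferrer and tagUrl, the partner-site.example domain, and the medium and campaign values are all assumptions made for illustration. The referrer is passed in as an argument so the functions can be tried outside a browser; on a real page it would come from document.referrer.

```javascript
// Hypothetical helper: turn a referrer URL into a utm_source value.
function sourceFromReferrer(referrer) {
  if (!referrer) {
    return ''; // no referrer available (for example, a direct visit)
  }
  try {
    // Keep only the hostname and drop a leading "www."
    return new URL(referrer).hostname.replace(/^www\./, '');
  } catch (e) {
    return ''; // the referrer was not a parseable URL
  }
}

// Hypothetical helper: fixed medium and campaign, dynamic source.
function tagUrl(pageUrl, referrer, medium, campaign) {
  var url = new URL(pageUrl);
  var source = sourceFromReferrer(referrer);
  if (source) {
    url.searchParams.set('utm_source', source);
  }
  url.searchParams.set('utm_medium', medium);
  url.searchParams.set('utm_campaign', campaign);
  return url.toString();
}

// In a browser, the second argument would be document.referrer.
var example = tagUrl(
  'http://www.example.com/',
  'https://www.partner-site.example/article',
  'syndication',
  'content_syndication'
);
console.log(example);
```

When the referrer is empty or unparseable, utm_source is omitted rather than set to an empty value.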

-

@peteboyd you can refer to this tutorial: https://www.growwithom.com/2020/06/16/track-dynamic-traffic-google-tag-manager/

It should meet your requirements: it uses GTM to replace a static value with the URL in your utm_source.


Related Questions

-

What's Causing My Extremely Low Bounce Rate

My client's site that is reporting an under 10% bounce rate for all sources. Direct is the highest at 8%. I'm no expert in GA but wondering if there is a problem with the analytics/tag manager code on the site. I'm especially concerned about the GTM body script being in an iframe which I read could be trouble. <!-- Google Tag Manager (noscript) -->

Reporting & Analytics | | bradsimonis

<noscript><iframe src="https://www.googletagmanager.com/ns.html?id=GTM-MWGMNW6"

height="0" width="0" style="display:none;visibility:hidden"></iframe></noscript>

<!-- End Google Tag Manager (noscript) --> You can see all the source code here:

view-source:https://nfinit.com/0 -

Solved How to solve orphan pages on a job board

Working on a website that has a job board, and over 4000 active job ads. All of these ads are listed on a single "job board" page, and don’t obviously all load at the same time. They are not linked to from anywhere else, so all tools are listing all of these job ad pages as orphans. How much of a red flag are these orphan pages? Do sites like Indeed have this same issue? Their job ads are completely dynamic, how are these pages then indexed? We use Google’s Search API to handle any expired jobs, so they are not the issue. It’s the active, but orphaned pages we are looking to solve. The site is hosted on WordPress. What is the best way to solve this issue? Just create a job category page and link to each individual job ad from there? Any simpler and perhaps more obvious solutions? What does the website structure need to be like for the problem to be solved? Would appreciate any advice you can share!

Reporting & Analytics | | Michael_M2 -

Abnormally High Direct Traffic Volume

We have abnormally high amounts of direct traffic to our site. It's comprising over half of all web traffic while organic is second with considerably less. From there the volume decreases amongst other channels. I've never seen such a huge proportion of traffic being attributed the Direct. Does anyone know how to test this or see if there is an error in Google Analytics reporting?

Reporting & Analytics | | graceflack 01 -

How google crawls images and which url shows as source?

Hi, I noticed that some websites host their images to a different url than the one their actually website is hosted but in the end google link to the one that the site is hosted. Here is an example: This is a page of a hotel in booking.com: http://www.booking.com/hotel/us/harrah-s-caesars-palace.en-gb.html When I try a search for this hotel in google images it shows up one of the images of the slideshow. When I click on the image on Google search, if I choose the Visit Page button it links to the url above but the actual image is located in a totally different url: http://r-ec.bstatic.com/images/hotel/840x460/135/13526198.jpg My question is can you host your images to one site but show it to another site and in the end google will lead to the second one?

Technical SEO | | Tz_Seo0 -

How do I deindex url parameters

Google indexed a bunch of our URL parameters. I'm worried about duplicate content. I used the URL parameter tool in webmaster to set it so future parameters don't get indexed. What can I do to remove the ones that have already been indexed? For example, Site.com/products and site.com/products?campaign=email have both been indexed as separate pages even though they are the same page. If I use a no index I'm worried about de indexing the product page. What can I do to just deindexed the URL parameter version? Thank you!

Technical SEO | | BT20090 -

Does Title Tag location in a page's source code matter?

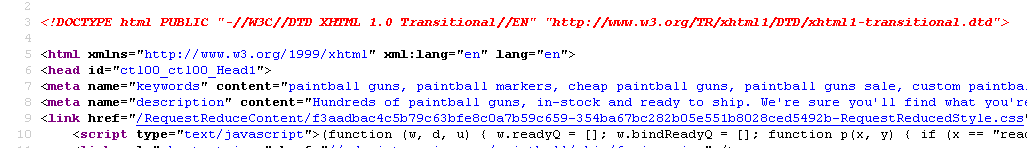

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

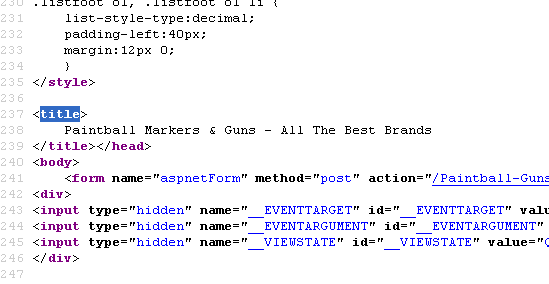

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

XML Sitemap and unwanted URL parameters

We currently don't have an XML sitemap for our site. I generated one using Screaming Frog and it looks ok, but it also contains my tracking url parameters (ref=), which I don't want Google to use, as specified in GWT. Cleaning it will require time and effort which I currently don't have. I also think that having one could help us on Bing. So my question is: Is it better to submit a "so-so" sitemap than having none at all, or the risks are just too high? Could you explain what could go wrong? Thanks !

Technical SEO | | jfmonfette0 -

Do I need to add canonical link tags to pages that I promote & track w/ UTM tags?

New to SEOmoz, loving it so far. I promote content on my site a lot and am diligent about using UTM tags to track conversions & attribute data properly. I was reading earlier about the use of link rel=canonical in the case of duplicate page content and can't find a conclusive answer whether or not I need to add the canonical tag to these pages. Do I need the canonical tag in this case? If so, can the canonical tag live in the HEAD section of the original / base page itself as well as any other URLs that call that content (that have UTM tags, etc)? Thank you.

Technical SEO | | askotzko1