Category: Technical SEO

Discuss site health, structure, and other technical SEO strategies.

-

ImportXML for twitter

Hi Mozzers, I'm trying to build a Google spreadsheet that imports only the bios of a user's followers on Twitter (Followerwonk generates a little too much for what I need). I have tried every importXML formula I can think of. Does anybody have any suggestions for getting this out using importXML in Google Docs? Thanks in advance, Chris

| chris.kent0 -

Is My Boilerplate Product Description Causing Duplicate Content Issues?

I have an e-commerce store with 20,000+ one-of-a-kind products. We only have one of each product, and once a product is sold we will never restock it. So I really have no intention of having these product pages show up in SERPs. Each product has a boilerplate description that the product's unique attributes (style, color, size) are plugged into. But a few sentences of the description are exactly the same across all products. Google Webmaster Tools doesn't report any duplicate content. My Moz Crawl Report shows 29 of these products as having duplicate content. But a Google search using the site operator and some text from the boilerplate description turns up 16,400 product pages from my site. Could this duplicate content be hurting my SERPs for other pages on the site that I am trying to rank? As I said, I'm not concerned about ranking for these product pages. Should I make them "rel=canonical" to their respective product categories? Or use "noindex, follow" on every product? Or should I not worry about it?

| znagle0 -

Blocking Test Pages En Masse on Sub-domain

Hello, We have thousands of test pages on a sub-domain of our site. Unfortunately, at some point these pages were visible to search engines and got indexed. Subsequently, we made a change to the robots.txt file for the test sub-domain. Gradually, over a period of a few weeks, the impressions and clicks reported by Google Webmaster Tools fell off for the test sub-domain. We are not able to implement the noindex tag in the head section of the pages, given the limitations of our CMS. Would blocking Googlebot via the firewall en masse for all the test pages have any negative consequences for the main domain that houses the real live content for our sites (which we would of course like to remain in the Google index)? Many thanks

| CeeC-Blogger0 -

Responsive design blowing out on page links?

Hi guys, We have a site about to launch with a responsive design to suit desktop, tablet and mobile. Each design carries its own navigation, as one primary set of navigational links won't suit all versions of the design. So as desktop resizes to mobile (or tablet), the desktop navigation is hidden (CSS) and replaced by the nav more suited to the mobile experience (hope I've explained that OK!). The problem is that if the primary navigation carries 50 links, then all 3 designs together carry 150 links, which is too many on-page links. Is that going to be a problem? Is there a tag that can be applied to the mobile/tablet nav links? Like a canonical tag but for links? Cheers in advance for your ideas, James

| lovealbatross0 -

How to redirect my .com/blog to my server folder /blog ?

Hello SEO Moz! It's always hard to post something serious on 04.01, but let's try anyway! I'm releasing Joomla websites: website.com, website.com/fr, website.com/es and so on. Usually I have the following folders on my server:

[ROOT]/com
[ROOT]/com/fr
[ROOT]/com/es

However, I would now like to have the following (for backup and security purposes):

[ROOT]/com
[ROOT]/es
[ROOT]/fr

So what can I do (I guess in .htaccess) to open the folder [ROOT]/es when people click on website.com/es? It sounds stupid, but I really don't know. I found this on the internet, but it did not answer my needs:

RewriteEngine On
RewriteCond %{REQUEST_URI} !(^/fr/.*) [NC]
RewriteRule ^(.*)$ /sites/fr/$1 [L,R=301]

Thanks a lot! Florian

| AymanH0 -

Can a CMS affect SEO?

As the title says, really. I run www.specialistpaintsonline.co.uk, and 6 months ago when I first got it, it had bad links, for which Google had put a penalty against it, so it lost its value. However, the penalty was lifted in Sept, the site complies with all guidelines, and SEO work has been done and is constantly monitored. The issue I have is that sales and visits have not gone up; we are failing fast, and running on 2 or 3 sales a month isn't enough to cover any sort of cost, let alone wages. Hence my question: can the CMS have anything to do with it? I'm at a loss and going grey; any help or advice would be great. Thanks in advance.

| TeamacPaints0 -

How to delete video rich snippets?

Hi mozzaholics, At first I was very happy to have video snippets appear in Google (200 pages), but now, after 6 months, I see a drop of almost 30% in traffic, whilst the average rank stayed the same, compared to the star snippets I have for other pages. So I want to remove the video snippets and have the old rich snippets back. I tried removing the video sitemap, and Google's structured data tool doesn't show video snippets. Does anybody know what else I can do to remove the video snippets? Has anybody succeeded in deleting them? Please share your wisdom. Ivo

| SEO_ACSI1 -

Businesses That Have 2 Trading Addresses

Hi, I have a quick question regarding Local SEO. We have a building company that operates from 2 addresses, about 70 miles apart. When we changed the address in the footer of our website to a different registered office the rankings for that local area bombed. What I would like to know is if there is a way I can properly mark up the site so that Google recognizes the same business works from 2 locations. Thanks

| denismilton0 -

Is this a Penguin hit; how to recover?

Our site is: www.kibin.com Our organic search traffic has dropped significantly in the last few days (about 50%). Although we don't have a ton of traffic coming in via organic search, this is a huge blow. We were previously in the top spot for keywords like 'edit my essay', 'fix my paper', and 'paper revision'. These keyword phrases linked to smaller services pages on our site such as: kibin.com/s/edit-my-essay kibin.com/s/fix-my-paper kibin.com/s/paper-revision We no longer rank for these keywords... at all. My first question/concern is... is this a Penguin penalty? I'm not sure. Honestly, I know we have some directory links, but we've never been aggressive on the backlink front. In fact, in the last few months we've been investing quite a bit into content: kibin.com/blog If this is a Penguin penalty, how should I best go about cleaning this up? I'm not even sure what links Google would be considering spammy, and again... we really don't have that extensive a backlink profile. Please help, this is a real blow to our business and it's got me a bit freaked out. Thanks!

| Kibin0 -

Website cache?

Hi mozzers, I am conducting an audit and was looking at the cached version of the site. The homepage is fine, but for all the other pages I get a Google 404. I don't think this is normal. Can someone tell me more about what the issue could be here? Thanks

| Ideas-Money-Art0 -

Does a sub-domain benefit a domain...

I have seen some mixed comments as to whether a sub-domain would benefit from the authority built up by its domain... but does a domain benefit from a sub-domain?

| Switch_Digital0 -

Internal linking disaster

Can someone help me understand what my devs have done? The site has thousands of pages, but if there's an internal homepage link on all of the pages (click on the logo), shouldn't that count for internal links? Could it be because they are nofollow? http://goo.gl/0pK5kn I've attached my competitors' Open Site Explorer rankings (I'm the 2nd column), so despite the fact the site is new you can see where I'm getting my ass kicked. Thanks!

| bradmoz0 -

Better to Remove Toxic/Low Quality Links Before Building New High Quality Links?

Recently an SEO audit from a reputable SEO firm identified almost 50% of the incoming links to my site as toxic, 40% as suspicious and 5% as good quality. The SEO firm believes it is imperative to remove links from the toxic domains. Should I remove toxic links before building new ones? Or should we first work on building new links before removing the toxic ones? My site only has 442 subdomains with links pointing to it. I am concerned that there may be a drop in ranking if links from the toxic domains are removed before new quality ones are in place. For a bit of background, my site has a Moz Domain Authority of 27 and a Moz Page Authority of 38. It receives about 4,000 unique visitors per month through organic search. About 150 subdomains that link to my site have a Majestic SEO citation flow of zero and a Majestic SEO trust flow of zero. They are pretty low quality. However, I don't know if I am better off removing them first or building new quality links before I disavow more than a third of the links to the site. Any ideas? Thanks, Alan

| Kingalan10 -

Toxic Link Removal

Greetings Moz Community: Recently I received a site audit from a Moz certified SEO firm. The audit concluded that technically the site did not have major problems (unique content, good architecture). But the audit identified a high number of toxic links. Out of 1,300 links approximately 40% were classified as suspicious, 55% as toxic and 5% as healthy. After identifying the specific toxic links, the SEO firm wants to make a Google disavow request, then manually request that the links be removed, and then make a final disavow request to Google for the removal of the remaining bad links. They believe that they can get about 60% of the bad links removed. Only after the removal process is complete do they think it would be appropriate to start building new links. Is there a risk that this strategy will result in a drop in traffic with so many links removed (even if they are bad)? For me (and I am a novice) it would seem more prudent to build links at the same time that toxic links are being removed. According to the SEO firm, the value of the new links in the eyes of Google would be reduced if there were many toxic links to the site, so that approach would be a waste of resources. While I want to move forward efficiently, I absolutely want to avoid the risk of a drop in traffic. I might add that I have not received any messages from Google regarding bad links. But my firm did engage in link building in several instances and our traffic did drop after the Penguin update of April 2012. Also, is there value in having a professional SEO firm remove the links and build new ones? Or is this something I can do on my own? I like the idea of having a pro take care of this, but the costs (audit, coding, design, content strategy, local SEO, link removal, link building, copywriting) are really adding up. Any thoughts? Thanks, Alan

| Kingalan10 -

What would be considered a bad ratio to determine Index Bloat?

I am using Annie Cushing's most excellent site audit checklist from Google Docs. My question concerns Index Bloat because it is mentioned in her "Index" tab. We have 6,595 indexed pages and only 4,226 of those pages have received 1 or more visits since January 1 2013. Is this an acceptable ratio? If not, why not and what would be an acceptable ratio? I understand the basic concept that "dissipation of link juice and constrained crawl budget can have a significant impact on SEO traffic." [Thanks to Reid Bandremer http://www.lunametrics.com/blog/2013/04/08/fifteen-minute-seo-health-check/#sr=g&m=o&cp=or&ct=-tmc&st=(opu%20qspwjefe)&ts=1385081787] If we make this an action item I'd like to have some idea how to prioritize it compared to other things that must be done. Thanks all!

| danatanseo1 -
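For reference, the ratio described in the question above can be worked out directly. The short Python sketch below only does the arithmetic on the two figures quoted in the post; it does not say what ratio would count as acceptable, and the variable names are made up for illustration.

```python
# Figures quoted in the question above.
indexed_pages = 6595
pages_with_visits = 4226

# Pages that are indexed but received no visits in the period.
pages_without_visits = indexed_pages - pages_with_visits

visited_share = pages_with_visits / indexed_pages
unvisited_share = pages_without_visits / indexed_pages

print(f"Indexed pages with at least one visit: {visited_share:.1%}")
print(f"Indexed pages with no visits: {pages_without_visits} ({unvisited_share:.1%})")
```

So roughly 64% of the indexed pages received at least one visit, and about 36% (2,369 pages) received none.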

What do I do with these back links?

In the last two weeks, I've got 10 pingbacks from this http://caraccidentlawyer.cc/coroner-ids-berkeley-bodies-who-were-killed-in-recent-car-accident/ and sites like it. The featured attorney is a competitor of ours and, since the links aren't sex/drugs/rock&roll related, (and he's linked too) I doubt this is a negative SEO campaign, but I want it to stop. These blogs are basically pure spam. Any suggestions?

| KempRugeLawGroup1 -

Deindexed site - is it best to start over?

A potential client's website has been deindexed from Google. We'd be completely redesigning his site with all new content. Would it be best to purchase a new URL and redirect the old deindexed site to the new one, or try to stick with the old domain?

| WillWatrous0 -

Website Redesign / Switching CMS / .aspx and .html extensions question

Hello everyone, We're currently preparing a website redesign for one of our important websites. It is our most important website, with good rankings and a lot of visitors from search engines, so we want to be really careful with the redesign. Our strategy is to keep as much in place as possible. At first, we are only changing the styling of the website; we will keep the content, the structure, and as many URLs as possible the same. However, we are switching from a custom-built CMS which created URLs like www.homepage.com/default-en.aspx

Now we would like to keep this URL the same, but our new CMS does not support this kind of URL. The same goes for, for instance, the URL: www.homepage.com/products.html

We're not able to recreate this URL in our new CMS. What would be the best strategy for SEO? Keep the URLs like this:

www.homepage.com/default-en

www.homepage.com/products

Or doesn't it really matter, since Google will view these as completely different URLs? And what would the impact of these changes in URLs be? Thanks a lot in advance! Best Regards, Jorg

| NielsB1 -

SEO-optimized URLs for Japan: English or Japanese Characters

Hi, Has anyone got experience with Japanese URLs? I'm currently working on the relaunch of the Japanese site of troteclaser.com and I wonder if we should use English or Japanese characters for the URLs. I found some topics on the forums about this, but they only tell you that Google can crawl both without problems. The question is whether there is a benefit if Japanese characters are used.

| Troteclaser1 -

Effect of temporary subdomains on the root domain

Hi all, A client of ours tends to have a number of offers and competitions during the year and would like to host these competitions on separate sub-domains. Once a competition is over (generally these last for approximately a month) the sub-domain gets deleted and pointed back to the main site. I was wondering whether this would have any effects on the root domain. Can I get your opinion please?

| ICON_Malta0 -

Are similar title tags frowned upon by search engines?

We are a B2B company that is looking to convert to one global portal very soon. It is only then that we will be able to address a lot of the IA SEO issues we are currently facing. However, we are looking to make some quick fixes, namely adding a proper title to the different country homepages. Will having the same title, with only the country modifier swapped out, be a good tactic? Since we want a unified title across all country sites, it just makes sense that we don't change it for every single country. For example:

Small Business Solutions for B2B Marketers | Company USA
Small Business Solutions for B2B Marketers | Company Italy
Small Business Solutions for B2B Marketers | France

| marshseo0 -

Duplicate website with http & https

I have a website for which, in one specific US state only, we had to add a certificate so that it appears with https. My question is how to prevent the website from being penalized for duplicate content with the http version in that specific state. Please advise. Thanks!

| taly0 -

Has anyone used prbuzz.com for pr distribution?

Has anyone used prbuzz.com for PR distribution in an effort to boost SEO? I am attracted to their unlimited distribution for one price for the year. Does anyone have an opinion and/or a suggestion of another company? Thanks!

| entourage2120 -

Should I get a unique IP?

I have a couple of sites hosted on a VPS that share the same IP. Should I assign each site a unique IP? Would a sudden IP change affect my rankings in Google?

| rlopes5280 -

Blocking Affiliate Links via robots.txt

Hi, I work with a client who has a large affiliate network pointing to their domain, which is a large part of their inbound marketing strategy. All of these links point to a subdomain, affiliates.example.com, which then redirects the links through a 301 redirect to the relevant target page for the link. These links have been showing up in Webmaster Tools as top linking domains and also in the latest downloaded links reports. To follow guidelines and ensure that these links aren't counted by Google for either positive or negative impact on the site, we have added a block in the robots.txt of the affiliates.example.com subdomain, blocking search engines from crawling the full subdomain. The robots.txt file contains the following code:

User-agent: *
Disallow: /

We have authenticated the subdomain with Google Webmaster Tools and made certain that Google can reach and read the robots.txt file. We know they are being blocked from reading the affiliates subdomain. However, although we added this block to the robots.txt a few weeks ago, links are still showing up in the latest downloads report as first being discovered after we added the block. It's been a few weeks already, and we want to make sure that the block was implemented properly and that these links aren't being used to negatively impact the site. Any suggestions or clarification would be helpful: if the subdomain is being blocked for the search engines, why are the search engines following the links and reporting them in the www.example.com subdomain GWMT account as latest links? And if the block is implemented properly, will the total number of links pointing to our site as reported in the links to your site section be reduced, or does this not have an impact on that figure? From a development standpoint, it's a much easier fix for us to adjust the robots.txt file than to change the affiliate linking connection from a 301 to a 302, which is why we decided to go with this option. Any help you can offer will be greatly appreciated. Thanks, Mark

| Mark_Ginsberg0 -
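One way to sanity-check the robots.txt rules quoted in the post above is to parse them locally. The sketch below uses Python's standard `urllib.robotparser`; the affiliate URL is a made-up example, and the check only tells you whether a compliant crawler would be allowed to fetch a URL on that host. It says nothing about how links are counted or reported in Webmaster Tools.

```python
from urllib.robotparser import RobotFileParser

# The two rules quoted in the question, as they would appear in robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical affiliate URL, used only for illustration.
test_url = "http://affiliates.example.com/go/some-offer"

# can_fetch() returns False when the rules disallow the URL for that user agent.
blocked = not parser.can_fetch("Googlebot", test_url)
print("Blocked for Googlebot:", blocked)
```

With these rules every path on the host is disallowed for every user agent, so the check reports the URL as blocked.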

Are nofollow, noindex meta tags healthy for this particular situation?

Hi mozzers, I am conducting an audit for a client and found over 200 instances of meta tags (nofollow, noindex). These were implemented essentially on blog pages/category pages and tags, not blog posts, which is a good sign. I believe that tagged URLs aren't something to worry about, since these create tons of duplicates. In regards to the category pages, I feel that they fall in the same basket as tags, but I am not positive about it. Can someone tell me if these are fine to have noindex, nofollow? Also, on the website subnav pages, which are related to the category pages mentioned previously, the webmaster has implemented noindex,follow (screenshot below), which seems OK to me. Am I right? Thanks

| Ideas-Money-Art0 -

Is using JavaScript injected text in line with best practice on making blocks of text non-crawlable?

I have an ecommerce website that has common text on all the product pages, e.g. delivery and returns information. Is it ok to use non-crawlable JavaScript injected text as a method to make this content invisible to search engines? Or is this method frowned upon by Google? By way of background info - I'm concerned about duplicate/thin content, so want to tackle this by reducing this 'common text' as well as boosting unique content on these pages. Any advice would be much appreciated.

| Coraltoes770 -

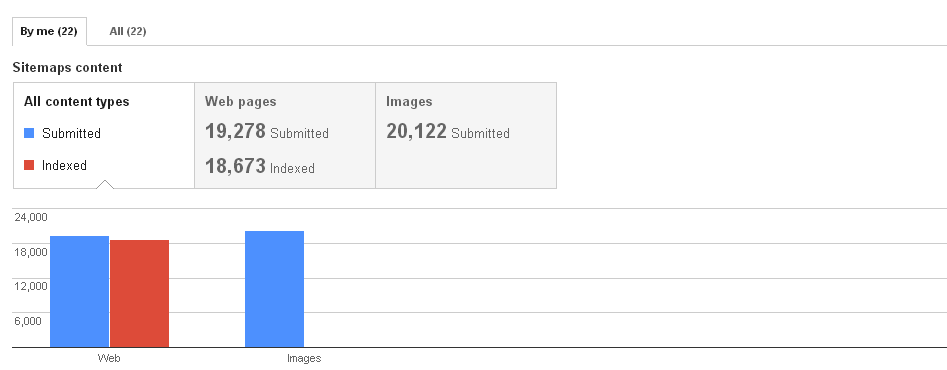

Image Indexing Issue by Google

Hello All, My URL is: www.thesalebox.com I submitted my image sitemap in Google Webmaster Tools on 10th Oct 2013, but Google still has not indexed any of my site's images. Please refer to my sitemaps, www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xml; my webmaster status and image indexing status are below. Can you please help me understand why my images are not being indexed in Google yet? Is there any issue? Please give me suggestions. Thanks!

| CommercePundit0 -

SEO Ramifications of migrating traditional e-commerce store to a platform based service

Hi, I'm thinking of migrating my 11-year-old store to a hosted, platform-based e-commerce provider such as Shopify or Amazon hosted solutions. I'm worried, though, that I will lose my domain's history and authority if I do so. Can anyone advise whether this is likely, or whether it will be the same as a 301 redirect and should be fine? All Best, Dan

| Dan-Lawrence0 -

301 Redirect - Technical Question

I have recently updated a site, and for the URLs that had changed or were not transferring I set up 301 redirects in the .htaccess file as follows.

This one works:
Redirect 301 /industry-sectors http://www.tornadowire.co.uk/fencing

But this one doesn't:
Redirect 301 /industry-sectors/equine http://www.tornadowire.co.uk/fencing/application/equestrian/

What it does is change the URL to http://www.tornadowire.co.uk/fencing/equine instead, which returns a 404 page not found error. The server is an nginx-based server, and we have moved from a Joomla platform to a WordPress platform. I would be grateful for any ideas.

| paulie650 -
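The behaviour described in the post above is consistent with prefix-style redirect matching, where the first rule whose path is a prefix of the request wins and the rest of the request path is appended to the target. The Python sketch below is a simplified model of that idea, assuming Apache `Redirect`-like semantics; it is not the server's actual implementation, and `apply_redirects` is a hypothetical helper written only for this illustration.

```python
def apply_redirects(path, rules):
    """Return the redirect target for path, or None if no rule matches.

    Simplified model of prefix-style redirect rules: the first rule whose
    source is a path-segment prefix of the request wins, and the remainder
    of the request path is appended to the target.
    """
    for source, target in rules:
        source = source.rstrip("/")
        if path == source or path.startswith(source + "/"):
            return target + path[len(source):]
    return None


BASE = "http://www.tornadowire.co.uk"

# Order as quoted in the question: the general rule comes first.
general_first = [
    ("/industry-sectors", BASE + "/fencing"),
    ("/industry-sectors/equine", BASE + "/fencing/application/equestrian/"),
]

# The same two rules with the more specific one listed first.
specific_first = list(reversed(general_first))

print(apply_redirects("/industry-sectors/equine", general_first))
print(apply_redirects("/industry-sectors/equine", specific_first))
```

Under this model the first ordering sends /industry-sectors/equine to /fencing/equine, which matches the 404 described in the question, while the second ordering reaches /fencing/application/equestrian/. Whether the live server evaluates the rules this way is an assumption worth verifying.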

How to Let Google Know I am a new Site Owner and to Remove or De-value all backlinks?

I am looking to buy a new domain for a brand. The problem is that the domain was registered in 1996 and has around 6k backlinks (according to Ahrefs) that I need removed, as the old content will have no relevance to my new site. Should I just disavow all of them? Is there anything "special" I can do to let Google know that the site will have a new owner and new content, and to remove/discount the current links?

| RichardSEO0 -

Cloaking?

We have on the home page of our charity site a section called "recent donations" which pulls the most recent donations from a database and displays them on the website via an asp write, (equivalent of php echo) . Google is crawling these and sometimes displays them in description tags -- which looks really messy. Is there a way to hide this content without it being considered cloaking?

| Morris770 -

Should all pagination pages be included in sitemaps

How important is it for a sitemap to include all individual URLs for paginated content? Assuming the rel next and prev tags are set up, would it be OK to just have page 1 in the sitemap?

| Saijo.George0 -

Find all old links from a site to 301

We worked on this site a while ago - http://www.electric-heatingsupplies.co.uk/ Whilst we did a big 301 redirect exercise, I wanted to check that we "got" all of them. Is there a historical way I can check all the old indexed links to make sure they correlate to the new links? Thanks!!

| lauratagdigital0 -

URL Changes And Site Map Redirects

We are working on a site redesign which will change/shorten our URL structure. The primary domain will remain the same; however, most of the other URLs on the site are getting much simpler. My question is how this should best be handled when it comes to sitemaps, because massive numbers of URLs will be redirected to the new, shorter URLs. Should a new sitemap be submitted right at launch, and the old sitemap removed later? I know that Google does not like having redirects in sitemaps. Has anyone done this on a large scale (60k URLs or more) and have any advice?

| RMATVMC0 -

AJAX and High Number Of URLS Indexed

I recently took over as the SEO for a large ecommerce site. Every month or so our Webmaster Tools account is hit with a warning for a high number of URLs. In each message they send there is a sample of problematic URLs. 98% of each sample is not an actual URL on our site but an AJAX request URL that users are making. This is a server-side request, so the URL does not change when users make narrowing selections for items like size, color etc. Here is an example of what one of those looks like: Tire?0-1.IBehaviorListener.0-border-border_body-VehicleFilter-VehicleSelectPanel-VehicleAttrsForm-Makes We have over 3 million indexed URLs according to Google because of this. We are not submitting these URLs in our sitemaps; Googlebot is making lots of AJAX selections according to our server data. I have used the URL Parameter Handling Tool to target some of those parameters that were set to let Google decide, and set them so that no URLs with those parameters are indexed. I still need more time to see how effective that will be, but it does seem to have slowed the number of URLs being indexed. Other notes: 1. Overall traffic to the site has been steady and even increasing. 2. Googlebot crawls an average of 241,000 URLs each day according to our crawl stats. We are a large ecommerce site that sells parts, accessories and apparel in the power sports industry. 3. We are using the Wicket framework for our website. Thanks for your time.

| RMATVMC0 -

Templates for Meta Description, Good or Bad?

Hello, We have a website where users can browse photos in different categories. For each photo we are using a meta description template such as: Are you looking for a nice and cool photo? [Photo name] is the photo which might be of interest to you. And in the keywords tag we are using: [Photo name] photos, [Photo name] free photos, [Photo name] best photos. I'm wondering, is this a safe method? It's very difficult to write descriptions manually when you have 3,000+ photos in the database. Thanks!

| TheSEOGuy10 -
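To make the templating described in the post above concrete, here is a minimal Python sketch of how such descriptions and keywords might be generated. The template strings are taken from the question; the function name `build_meta` and the photo name "Sunset Beach" are made up for illustration, and the sketch takes no position on whether the approach is safe for SEO.

```python
# Template text as quoted in the question; {name} stands for [Photo name].
DESCRIPTION_TEMPLATE = (
    "Are you looking for a nice and cool photo? "
    "{name} is the photo which might be of interest to you."
)
KEYWORD_TEMPLATES = ["{name} photos", "{name} free photos", "{name} best photos"]


def build_meta(name):
    """Fill the description and keywords templates for one photo name."""
    description = DESCRIPTION_TEMPLATE.format(name=name)
    keywords = ", ".join(t.format(name=name) for t in KEYWORD_TEMPLATES)
    return description, keywords


# "Sunset Beach" is a hypothetical photo name.
description, keywords = build_meta("Sunset Beach")
print(description)
print(keywords)
```

Every page built this way differs from the others only in the photo name, which is the situation the question is asking about.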

Site Navigation

Hello, I have some questions about best practices for site navigation and internal linking. I'm currently assisting aplossoftware.com with its navigation. The site has about 200 pages total. They currently have a very sparse header with a lot of links in the footer. The three most important keywords they want to rank for are nonprofit accounting software, church accounting software and file 990 online. 1. What are your thoughts about including a drop-down menu in the header for the different products (they have 3 main products)? This would allow us to include a few more links in the header and give more real estate to include full keywords in anchor text. 2. They have a good blog with content that gets regularly updated. Currently it's linked in the footer and gets a tiny number of visits. What are your thoughts about including it as a link in the header instead? 3. What are best practices for using (or not using) nofollow with site navigation and footer links? How about with links to social media pages like Facebook/Twitter? Any other thoughts/ideas about the site navigation for this site (www.aplossoftware.com) would be much appreciated. Thanks!

| stageagent0 -

How can I best handle parameters?

Thank you for your help in advance! I've read a ton of posts on this forum on this subject, and while they've been super helpful I still don't feel entirely confident about which approach I should take. Forgive my very obvious noob questions - I'm still learning!

The problem: I am launching a site (coursereport.com) which will feature a directory of schools. The URL for the schools directory will be coursereport.com/schools. The directory can be filtered by a handful of fields, listed here:

Focus (ex: “Data Science”)
Cost (ex: “$<5000”)
City (ex: “Chicago”)
State/Province (ex: “Illinois”)
Country (ex: “Canada”)

When a filter is applied to the directory page, the CMS produces a new page with URLs like these:

coursereport.com/schools?focus=datascience&cost=$<5000&city=chicago
coursereport.com/schools?cost=$>5000&city=buffalo&state=newyork

My questions:

1) Is the above parameter-based approach appropriate? I’ve seen other directory sites that take a different approach (below) that would transform my examples into more “normal” URLs.

coursereport.com/schools?focus=datascience&cost=$<5000&city=chicago
VERSUS
coursereport.com/schools/focus/datascience/cost/$<5000/city/chicago (no params at all)

2) Assuming I use either approach above, isn't it likely that I will have duplicate content issues? Each filter does change the on-page content, but there could be instances where 2 different URLs with different filters applied produce identical content (ex: focus=datascience&city=chicago OR focus=datascience&state=illinois). Do I need to specify a canonical URL to solve for that case? I understand at a high level how rel=canonical works, but I am having a hard time wrapping my head around which versions of the filtered results ought to be specified as the preferred versions. For example, would I just take all of the /schools?focus=X combinations and call that the canonical version within any filtered page that contained other additional parameters like cost or city?

Should I be changing page titles for the unique filtered URLs?

I read through a few Google resources to try to better understand how to best configure URL params via Webmaster Tools. Is my best bet just to follow the advice in the article below, define the rules for each parameter there, and not worry about using rel=canonical?

https://support.google.com/webmasters/answer/1235687

An assortment of the other stuff I’ve read, for reference:

http://www.wordtracker.com/academy/seo-clean-urls
http://www.practicalecommerce.com/articles/3857-SEO-When-Product-Facets-and-Filters-Fail
http://www.searchenginejournal.com/five-steps-to-seo-friendly-site-url-structure/59813/
http://googlewebmastercentral.blogspot.com/2011/07/improved-handling-of-urls-with.html

| alovallo0 -
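The idea floated in the question above, treating the /schools?focus=X version as the preferred URL for any page that also carries cost or city filters, can be sketched in a few lines of Python. This is only an illustration of that one proposal, not a recommendation: the `https` scheme, the `CANONICAL_PARAMS` choice and the `canonical_url` helper are all assumptions introduced here.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption for illustration: only these filters define the preferred URL.
CANONICAL_PARAMS = ("focus",)


def canonical_url(url, keep=CANONICAL_PARAMS):
    """Drop every query parameter not listed in keep and sort the rest."""
    parts = urlsplit(url)
    pairs = sorted((k, v) for k, v in parse_qsl(parts.query) if k in keep)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(pairs), ""))


# Two filtered URLs from the question's duplicate-content example.
a = canonical_url("https://coursereport.com/schools?focus=datascience&city=chicago")
b = canonical_url("https://coursereport.com/schools?state=illinois&focus=datascience")

print(a)
print(b)
```

Both filtered URLs collapse to the same /schools?focus=datascience address, which is the value each page would then reference in its rel=canonical tag under this scheme.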

Where to put Schema On Page

In what part of my page should I put Schema data? Header? Footer? Also, on all pages, or just the home page?

| bozzie3114 -

Is there any SEO benefit to pulling a picture from another website and linking to it from a blog?

For example, if blog.mountainmedia.com were to link a product picture directly to mountainmedia.com, would this be considered a high quality backlink?

| MountainMedia0 -

Duplicate Titles and Sitemap rel=alternate

Hello, Does anyone know why I still have duplicate titles after crawling with Moz (Google Webmaster Tools shows the same), even though I implemented a new sitemap with the rel=alternate attribute for languages one or two weeks ago? In fact, the duplicates are in the titles of pages like http://socialengagement.it/su-di-me and http://socialengagement.it/en/su-di-me. The sitemap is at socialengagement.it/sitemap.xml (please note the formatting somehow does not show correctly; you should view the source code to double-check whether it's done properly. I made it by hand). Thanks for the help! Eugenio

| socialengaged0 -

Dealing with 410 Errors in Google Webmaster Tools

Hey there! (Background) We are doing a content audit on a site with 1,000s of articles, some going back to the early 2000s. There is some content that was duplicated from other sites, does not have any external links to it and gets little or no traffic. As we weed these out we set them to 410 to let the Goog know that this is not an error, we are getting rid of them on purpose and so the Goog should too. As expected, we now see the 410 errors in the Crawl report in Google Webmaster Tools. (Question) I have been going through and "Marking as Fixed" in GWT to clear out my console of these pages, but I am wondering if it would be better to just ignore them and let them clear out of GWT on their own. They are "fixed" in the 410 way as I intended and I am betting Google means fixed as being they show a 200 (if that makes sense). Any opinions on the best way to handle this? Thx!

| CleverPhD0 -

Do multiple empty search result pages count as duplicate content?

I am writing an online application that, among other things, allows users to search through our database for results. Pretty simple stuff. My question is this: when the site is starting out, there will probably be a lot of searches that bring back empty pages, since we will still be building it up. Each page will dynamically generate the title tags, description tags, and H1, H2, H3 tags, so that part will be unique, but otherwise they will be almost identical empty results pages until then. Would Google count all these empty result pages as duplicate content? Does anybody have any experience with this? Thanks in advance.

| rayvensoft0 -

Moving Blog Question

Site A is my primary site. I created a blog on site B, wrote good content, and gave links back to site A. I think this is causing a penalty to occur. I no longer want to update site B and want to move the entire blog and its content to sitea.com/blog. Is this a good idea, or should I just start a fresh/new sitea.com/blog and remove the links from site B to site A?

| CLTMichael0 -

How Googlebot sees your site

So I have stumbled across various websites like this: http://www.smart-it-consulting.com/internet/google/googlebot-spoofer/ The concept here is to be able to view your site as Googlebot sees it. However, the results are a little puzzling. According to the results, Google is reading the text on my page but not the title tags. Are websites like this accurate, or does Google not read title tags and H1 tags anymore? Also, on a slightly related note: I noticed the results show the navigation bar is being read first by Google. Is this bad, and should the navigation bar be optimized for keywords as well? If it were, it would read a bit funny and the "humans" would be confused.
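One way to sanity-check a spoofer tool like the one linked above is to fetch the raw HTML yourself and see whether the title and H1 are present in what the server returns. This is a minimal sketch, assuming a request with a Googlebot-style User-Agent header is a fair stand-in; it only shows the HTML served to that user agent, not how Google actually processes or renders the page. `TitleAndH1Parser`, `extract_title_and_h1`, and `fetch_as_googlebot` are hypothetical names.

```python
import urllib.request
from html.parser import HTMLParser

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


class TitleAndH1Parser(HTMLParser):
    """Collect the text of the <title> tag and of every <h1> tag."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1s = []
        self._current = None  # "title", "h1", or None

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1s[-1] += data


def extract_title_and_h1(html_text):
    """Return (title, [h1 texts]) found in the given HTML string."""
    parser = TitleAndH1Parser()
    parser.feed(html_text)
    parser.close()
    return parser.title.strip(), [h.strip() for h in parser.h1s]


def fetch_as_googlebot(url):
    """Fetch url with a Googlebot-style User-Agent and return the decoded body."""
    request = urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


sample = (
    "<html><head><title>Example page</title></head>"
    "<body><nav>Home</nav><h1>Main heading</h1></body></html>"
)
print(extract_title_and_h1(sample))
```

If the title and H1 show up here but not in the spoofer's output, the likelier explanation is that the tool simply does not display them.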

| StreetwiseReports0 -

Avoid Keyword Cannibalization For Geo Terms?

I'm dealing with some geographical keywords that may cause me problems. If I target a county and a city inside that county that share part of a name, will I be at risk for cannibalization? For example, if I want to target "Jasper County Adoption Attorneys" and "Jasper Park Adoption Attorneys," will I be at risk just based on cannibalization? Thanks, Ruben

| KempRugeLawGroup0 -

303 redirect for geographically targeted content

Any insight as to why Yelp does a 303 redirect, when everyone else seems to be using a 302? Does a 303 pass PR? Is a 303 preferred?
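As background for comparing the two codes, here is a small sketch that prints the standard reason phrases for the common redirect statuses and a short note on how clients are expected to treat the request method. The notes summarize the HTTP specification; they say nothing about how any search engine treats these codes for ranking, which the question leaves open.

```python
from http import HTTPStatus

# Notes on request-method handling, summarized from the HTTP specification.
METHOD_NOTES = {
    301: "permanent; clients may change POST to GET",
    302: "temporary; clients may change POST to GET",
    303: "client should retrieve the other URL with GET",
    307: "temporary; client must keep the original method",
}

REDIRECTS = {code: HTTPStatus(code).phrase for code in METHOD_NOTES}

for code, phrase in REDIRECTS.items():
    print(f"{code} {phrase}: {METHOD_NOTES[code]}")
```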

| jcgoodrich0 -

ALT attribute keyword on the same image but different pages

Hi there, As I'm sure you're probably aware, Moz advises using a keyword within the ALT attribute on pages. On a new website I am launching, I have the ability to add an alt keyword to image headers. On multiple pages we have the exact same image but with different keywords associated with it inside the alt attribute. The image itself is a collage of different images, and so the keywords used can, quite sneakily, match the image. My question is therefore: will using different keywords on the same image on different pages have a negative effect on SEO? Thanks, Stuart

| Stuart260 -

Google Webmaster Tools: Sitemap.xml not processed every day

Hi, We have multiple sites under our Google Webmaster Tools account, each with a sitemap.xml submitted. Each site's sitemap.xml status (attached below) shows it is processed every day except for one. Sitemap: /sitemap.xml. This Sitemap was submitted Jan 10, 2012, and processed Oct 14, 2013. The exception is one site (coed.com), for which the sitemap.xml was processed only on the day it was submitted, and we have to manually resubmit it every day to get it processed. Any idea why this might be happening? Thank you

| COEDMediaGroup0
