Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Latest Questions

Have an SEO question? Search our Q&A forum for an answer; if not found, use your Moz Pro subscription to ask our incredible community of SEOs for help!


How to get 'Links to your site' via the Google Search Console API?

Hey! Any idea how I can download backlinks via the Search Console API? This page from Google has a few commands but not the backlinks one - https://developers.google.com/apis-explorer/#p/webmasters/v3/ Has anyone collected backlinks data in the past? Appreciate your help! Thanks, Arjun

Link Building | | BaselineTry0 -

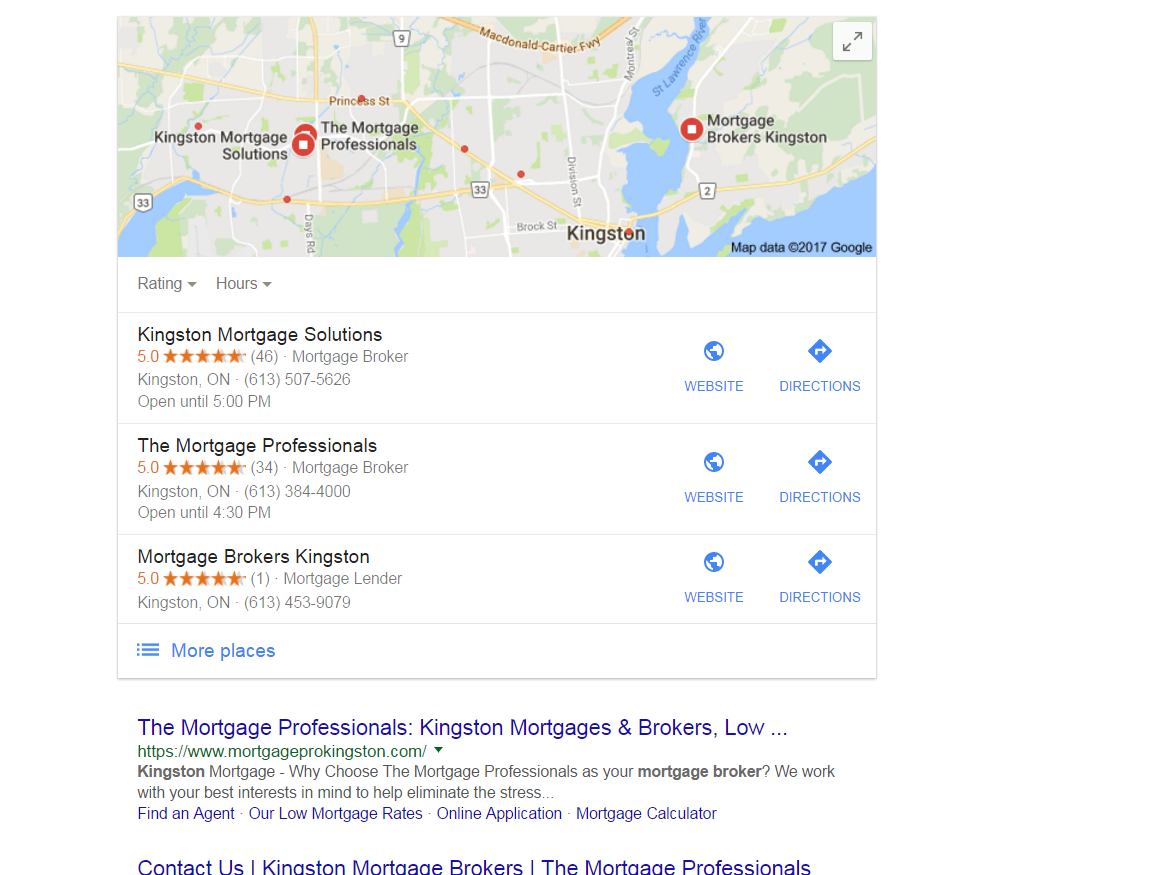

Improve Google Business ranking

While my client's websites have been ranking well in SERP for their key terms, I'm at a loss as to what I can do to improve their Google Business/Maps presence. I'm referring to their listing where the top three come up, or when you search on Google Maps.

Local Listings | | FPK

https://gyazo.com/26ec78ed7f712157ec72492199545431

Ex. 1: Several months ago my client was ranked #1 for both SERP and maps until they dropped to 2nd on maps. Now they're ranked 1st in search yet 2nd in the local business rankings, as you can see from the screenshot above. At one point my client's business had more reviews than the 1st-ranking business, yet they still weren't 1st.

Ex. 2: Client(s) is ranked 4th in search and doesn't show in the top 3 map listings for their search term. If you click on More places to view Google Maps, they're listed all the way down as the 15th listing or, worse, can't even be found when searching by their main SEO key term. Of course they are found by searching for their business name, so it's not as if there is a problem with the listing.

I make sure to: completely fill out their Google Business profile (NAP, hours, and pictures); have my client try to gain positive reviews; manage and respond to reviews (mainly the negative ones); and add a map and Google Business link to their website.

Can anyone offer any other insight on what else can be done to improve their local presence on maps that I might be missing?0 -

URL Parameter for Limiting Results

We have a category page that lists products. The page takes a parameter whose default value limits the display to 9 products. If the user wishes, they can view 15 products or 30 products on the same page. The parameter is ?limit=9 or ?limit=15 and so on. Google is reporting duplicate meta tags and meta descriptions for these URLs via HTML Suggestions. I have a couple of questions. 1. What should be my goal? Is my goal to have Google crawl the page with 9 items or crawl the page with all items in the category? In Search Console, the first part of setting up a URL parameter asks "Does this parameter change page content seen by the user?". In my opinion, the answer is Yes. Then, when I select how the parameter affects page content, I assume I'd choose Narrows because it's either narrowing or expanding the number of items displayed on the page. 2. When setting up my URL Parameters in Search Console, do I want to select Every URL or just let Googlebot decide? I'm torn because when I read about Every URL, it says this setting could result in Googlebot unnecessarily crawling duplicate content on your site (it's already doing that). When reading further, I begin to second-guess the Narrows option. Now I'm at a loss as to what to do. Any advice or suggestions will be helpful! Thanks.

SERP Trends | | dkeipper0 -
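One way to make the duplicate-URL question above concrete is to compute, for each parameterised URL, the single URL you would want treated as the canonical one. This is only a sketch under assumptions: the `limit` parameter name comes from the post, while `DISPLAY_ONLY_PARAMS`, `canonical_url`, and the `example.com` URLs are hypothetical names for illustration, and whether stripping the parameter is the right canonical choice depends on the site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: parameters that only change how many items are displayed.
DISPLAY_ONLY_PARAMS = {"limit"}


def canonical_url(url: str) -> str:
    """Return the URL with display-only query parameters removed.

    Other query parameters are kept in their original order; the
    fragment is dropped because it is never sent to the server.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in DISPLAY_ONLY_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), "")
    )


if __name__ == "__main__":
    for candidate in (
        "https://example.com/category?limit=9",
        "https://example.com/category?limit=30&color=red",
    ):
        print(candidate, "->", canonical_url(candidate))
```

With this mapping, ?limit=9, ?limit=15 and ?limit=30 all resolve to the same category URL, which is the value a rel="canonical" tag on each variant could carry.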

Target="_blank" and referral acquisition data

Hi, Any assistance would be greatly appreciated! I have been reading up on this topic but there are some contradictory articles out there and I was hoping someone could clarify the latest practice for me. Website A links out to four of its partner websites.

Search Behavior | | AMA-DataSet

These links are using target="_blank". When I look at the acquisition data for any of the partner websites, there is zero referral data from site A.

I tested that this wasn't an analytics bug by using the real-time feature while clicking the partner links to see what happened, and also to find the source, but I found that they are all being reported with a source of direct. Ideally I'd like it to show the referrals once more for reporting purposes. Do I need to add extra Google Analytics parameters/functions to track the outbound links? Or should this referral data be appearing correctly, and it is something unrelated to the links themselves? Or should I throw in the towel and drop the target blanks? Thank you in advance for your time.

Best Regards.0 -

Looking to remove dates from URL permalink structure. What do you think of this idea?

I know most people who remove dates from their URL structure usually do so and then set up a 301 redirect. I believe that's typically the right way to go about this. My biggest fear with doing a global 301 redirect implementation like that across an entire site is that I've seen cases where this has sort of shocked Google and the site took a pretty bad hit in organic traffic. Here's what I'm thinking a safer approach would be, and I'd like to hear others' thoughts. What if we: 1. Changed the permalink structure moving forward to remove the date in future posts. 2. Kept all current URLs as is, with their dates. 3. Went back and optimized past posts in waves (including proper 301 redirects and better URL structure). This way we avoid potentially shocking Google with a global change across all URLs. Do you know of a way this is possible with a large WordPress website? Do you see any complications that could come about in this process? I'd like to hear any other thoughts about this please. Thanks!

Intermediate & Advanced SEO | | HashtagJeff0 -

My "search visibility" went from 3% to 0% and I don't know why.

My search visibility on here went from 3.5% to 3.7% to 0% to 0.03% and now 0.05% in a matter of 1 month and I do not know why. I make changes every week to see if I can get higher in Google results. I do well with one website, which is for a medical office that has been open for years. With this new one, where the office has only been open a few months, I am having trouble. We aren't getting calls like I was hoping we would. In fact, the only one we did receive, I believe, came because we were closest to him in proximity on Google Maps. I am also having some trouble with the "Links" aspect of SEO. Everywhere I look to get linked, it seems you have to pay. We are a medical office; we aren't selling products, so not many blogs would want to talk about us. Any help with getting a higher rank on Google would be greatly appreciated. Also, any help with getting the search visibility up would be great as well.

Intermediate & Advanced SEO | | benjaminleemd1 -

Does a "Read More" button to open up the full content affect SEO?

As we've been refining our metrics for gauging whether or not a blog is effective -- if people are engaging with it -- one of the strategies we've seen (e.g. NYT, WaPo, Yahoo!) is "Read More." I've read a few articles with some who advocate using it and others who discourage it. Does anyone have any history adding "Read More" to their content and the effect it had?

Content Development | | ReunionMarketing0 -

Spaces between Letters and Numbers SEO question

This is a fun one - Example: Mercedes Benz is pushing to have all of their vehicle model names coincide with the worldwide branding, so the "C300" is supposed to be "C 300" and the "E300" is supposed to be "E 300"... I have a few issues here: when I use Voice Search for "Mercedes Benz C 300" there is no way (that I know of) to add a space between the number and letter. In addition, when searching for "C 300 for sale", Google corrects the text with "Did you mean: C300 for sale". I am seeking a way to accommodate both versions of the model names WITHOUT adding both C300 and C 300, etc. to the text on web pages. OR will Google eventually change the model names over time as Mercedes-Benz rolls out the new U.S. naming convention? Tough question - any thoughts? Thank you for your help -

Local SEO | | MBS-MBA0 -

Absolute vs. Relative Canonical Links

Hi Moz Community, I have a client using relative links for their canonicals (vs. absolute). Google appears to be following this just fine, but Bing, etc. are still sending organic traffic to the non-canonical links. It's a Drupal setup. Anyone have advice? Should I recommend that all canonical links be absolute? They are strapped for resources, so this would be a PITA if it won't make a difference. Thanks

Intermediate & Advanced SEO | | SimpleSearch1 -
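To illustrate what the relative vs. absolute distinction in the question above amounts to, here is a small sketch of how a relative canonical href resolves against the URL of the page that carries it, using standard URL resolution. The function name `absolute_canonical` and the `example.com` URLs are hypothetical; how any particular search engine resolves relative canonicals is not something this sketch can confirm.

```python
from urllib.parse import urljoin


def absolute_canonical(page_url: str, canonical_href: str) -> str:
    """Resolve a canonical href against the page URL it appears on.

    An href that is already absolute is returned unchanged; a relative
    one is resolved the way a browser resolves any relative link.
    """
    return urljoin(page_url, canonical_href)


if __name__ == "__main__":
    page = "https://example.com/blog/post?ref=newsletter"
    # Root-relative canonical: the query string on the page is discarded.
    print(absolute_canonical(page, "/blog/post"))
    # Document-relative canonical: resolved against the page's directory.
    print(absolute_canonical(page, "post"))
    # Already absolute: passed through as is.
    print(absolute_canonical(page, "https://example.com/blog/post"))
```

Emitting the resolved value in the template removes any dependence on how each crawler handles relative hrefs.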

MOZ not verifying my Google My Business listing

Hello Folks, Moz Local is not verifying my Google My Business Listing nor is it verifying the manual CSV upload. My Google My Business Listing and my manual CSV upload correctly display all of the same information as my Google My Business Listing and Facebook Page using the following Moz Local guidelines. The only thing that I can think of is that Moz Local is currently pulling up an older listing with a toll-free number; however, that has been corrected and the feed is real time, so I'm at a loss.

Facebook: The name, address, and phone number (NAP) listed on the page must be an exact match with the listing in Moz Local. The primary phone number listed must be a unique local number or a toll-free number. The primary page category must be “local business”. A minimum of 2 visitor check-ins are required. Not sure what to do here? Head to Facebook's resources to see how to let people check in and how you can check in. The page must be fully accessible to all potential visitors, so any restrictions based on age, location, etc. must be disabled. This can be easily checked by attempting to view the Facebook page in a Chrome incognito browser while not logged into a Facebook account; if you can view the page normally in these conditions, the accessibility should be in good shape!

Google My Business: The name, address, and phone number (NAP) listed on the page must be an exact match with the listing in Moz Local. The primary phone number listed must be a unique local number or a toll-free number. The business must not be listed as a “service area business”. A service area business displays with the street address being hidden, as well as the listing not being surfaced through the Google Places API (which is where we pull the listing details from). The listing must be verified by postcard. As a note, it may take a couple of days after the postcard verification process for the listing to be available through the Google Places API. The listing must be completely searchable through Google Maps, with the full NAP listed correctly.

Any help would be appreciated.

Moz Local | | Kodiiac0 -

How to fix: Attribute name not allowed on element meta at this point.

Hello, The HTML validator reports "Attribute name not allowed on element meta at this point" for all my meta tags. Yet, as I understand, it is essential to keep the meta description for SEO, for example. I read a couple of articles on how to fix that and one of them suggests considering an HTML5 custom data attribute instead of name: Do you think I should try to validate my page? And instead of ? I will appreciate your advice very much!

Technical SEO | | kirupa0 -

E-Commerce Site Collection Pages Not Being Indexed

Hello Everyone, So this is not really my strong suit but I’m going to do my best to explain the full scope of the issue and really hope someone has some insight. We have an e-commerce client (can't really share the domain) that uses Shopify; they have a large number of products categorized by Collections. The issue is that when we do a site: search of our Collection Pages (site:Domain.com/Collections/) they don’t seem to be indexed. Also, not sure if it’s relevant, but we recently did an overhaul of our design. Because we haven’t been able to identify the issue, here’s everything we know/have done so far: Ran a Moz Crawl Check and the Collection Pages came up. Checked Organic Landing Page Analytics (source/medium: Google) and the pages are getting traffic. Submitted the pages to Google Search Console. The URLs are listed on the sitemap.xml, but when we tried to submit the Collections sitemap.xml to Google Search Console, 99 were submitted but nothing came back as being indexed (unlike our other pages and products). We tested the URL in GSC’s robots.txt tester and it came up as being “allowed”, but just in case, below is the language used in our robots.txt:

Intermediate & Advanced SEO | | Ben-R

User-agent: *

Disallow: /admin

Disallow: /cart

Disallow: /orders

Disallow: /checkout

Disallow: /9545580/checkouts

Disallow: /carts

Disallow: /account

Disallow: /collections/+

Disallow: /collections/%2B

Disallow: /collections/%2b

Disallow: /blogs/+

Disallow: /blogs/%2B

Disallow: /blogs/%2b

Disallow: /design_theme_id

Disallow: /preview_theme_id

Disallow: /preview_script_id

Disallow: /apple-app-site-association

Sitemap: https://domain.com/sitemap.xml

A Google cache: search currently shows a collections/all page we have up that lists all of our products. Please let us know if there are any other details we could provide that might help. Any insight or suggestions would be very much appreciated. Looking forward to hearing all of your thoughts! Thank you in advance. Best,0 -
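The robots.txt rules quoted in the post above can be checked offline against sample paths. The following is a rough sketch using Python's standard-library parser, which does simple prefix matching and is not Googlebot's matcher, so it only approximates what the Search Console tester reports. The rules are copied from the post as they appear there; the helper name `is_allowed` and the sample paths (`/collections/shoes`, and so on) are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Rules as quoted in the post (domain.com is the post's placeholder).
RULES = """\
User-agent: *
Disallow: /admin
Disallow: /cart
Disallow: /orders
Disallow: /checkout
Disallow: /9545580/checkouts
Disallow: /carts
Disallow: /account
Disallow: /collections/+
Disallow: /collections/%2B
Disallow: /collections/%2b
Disallow: /blogs/+
Disallow: /blogs/%2B
Disallow: /blogs/%2b
Disallow: /design_theme_id
Disallow: /preview_theme_id
Disallow: /preview_script_id
Disallow: /apple-app-site-association
Sitemap: https://domain.com/sitemap.xml
"""


def is_allowed(path: str, rules: str = RULES, agent: str = "*") -> bool:
    """Return True if the stdlib parser would let `agent` fetch `path`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, "https://domain.com" + path)


if __name__ == "__main__":
    # Hypothetical sample paths, not taken from the client's site.
    for sample in ("/collections/shoes", "/collections/all", "/admin"):
        print(sample, "allowed" if is_allowed(sample) else "blocked")
```

If ordinary collection paths come back as allowed here too, that is consistent with the tester result in the post and points away from robots.txt as the cause.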

Can a .ly domain rank in the United States?

Hello members. I have a question and am seeking to confirm whether or not I am on the right track. I am interested in purchasing a .ly domain, which is the ccTLD for Libya. The .ly domain would be for branding purposes; however, at the same time I do not want to kill the website's ability to rank in Google.com (United States searches) because of this domain. Google does not consider .ly to be one of those generic ccTLDs like .io, .cc, .co, etc. that can rank, and Bitly has also moved away from the .ly extension to a .com extension. Back in 2011 when there was unrest in Libya, a few well-known sites that utilized the .ly extension had their domains confiscated, such as Letter.ly, Advers.ly and I think Bitly may have been on that list too; however, with the unrest behind us it is possible to purchase a .ly, so being able to obtain one is not an issue. From what I can tell, I should be able to specify in Google Search Console that the website utilizing the .ly extension is a US-based website. I can also do this with Google My Business, and I will keep the Whois info public so the Whois data can be seen as belonging to a US-based website. Based on everything I just said, do any of you think I will be OK if I register and use the .ly domain extension, and will I still be able to rank in Google.com (US searches)? Confirmation would help me sleep better. Thanks in advance everyone and have a great day!!

Intermediate & Advanced SEO | | joemaresca0 -

Subdomain vs. Separate Domain for SEO & Google AdWords

We have a client who carries 4 product lines from different manufacturers under a single domain name (www.companyname.com), and last fall, one of their manufacturers indicated that they needed to separate out one of those product lines from the rest, so we redesigned and relaunched as two separate sites - www.companyname.com and www.companynameseparateproduct.com (a newly-purchased domain). Since that time, their manufacturer has withdrawn the requirement to separate the product lines, but the client has been running both sites separately since they launched at the beginning of December 2016. Since that time, they have cannibalized their content strategy (effective February 2017) and hacked apart their PPC budget from both sites (effective April 2017), and are upset that their organic and paid traffic has correspondingly dropped on the original domain, and that the new domain hasn't continued to grow at the rate they would like it to (we did warn them, and they made the decision to move forward with the changes anyway). This past week, they decided to hire an in-house marketing manager, who is insisting that we move the newer domain (www.companynameseparateproduct.com) to become a subdomain on their original site (separateproduct.companyname.com). Our team has argued that making this change back 6 months into the life of the new site will hurt their SEO (especially if we have to 301 redirect all of the old content back again, without any new content regularly being added), which was corroborated by this article. We'd also have to kill the separate AdWords account and the quality score associated with the ads in that account to move them back. We're currently looking for any extra insight or literature that helps explain this to the client better - even if it is a little technical. (We're also open to finding out if this way of thinking is incorrect or if things have changed!)

Local Website Optimization | | mkbeesto0 -

If my website does not have a robot.txt file, does it hurt my website ranking?

After a site audit, I found out that my website doesn't have a robot.txt. Does it hurt my website rankings? One more thing: when I type mywebsite.com/robot.txt, it automatically redirects to the homepage. Please help!

Intermediate & Advanced SEO | | binhlai0 -

Google My Business - Switching from Local to National Presence

Hi, Before I started with my current employer (a national B2B company), someone set them up with a Google My Business page that has resulted in the home office appearing as a local search result. As a result, our competitors have a much more professional national Knowledge Graph sidebar complete with logo, Wikipedia blurb, social links, etc. displayed while we have a local result with reviews, images, and Google Map location. Since we are a B2B business with a national presence, I am trying to transition from the local to the broader company Knowledge Graph result, but I'm struggling to find information on the best steps to remove the local result. While the reviews are improving, this is a service-based business with a B2C element when it comes to end users, so historical reviews have been unkind -- to the point that I'd like to make the transition to a national presence not only to better reflect the entire region we serve, but also to remove as much review visibility as possible. The only option in Google My Business I've seen so far is to report the business as being closed, which, of course, it is not. I know a big Step 1 is to get a new Wikipedia page for the business created. (The company is legitimately deserving of one. I'm still trying to find the most effective approach to tackling this without violating Wikipedia policies.) Outside of that step, however, is there any sort of process someone can recommend for tackling this local-to-national Google transition? Thanks, Andrew

Reviews and Ratings | | Andrew_In_Search_of_Answers1 -

Best practice for deindexing large quantities of pages

We are trying to deindex a large quantity of pages on our site and want to know what the best practice for doing that is. For reference, the reason we are looking for methods that could help us speed it up is that we have about 500,000 URLs that we want deindexed because of misformatted HTML code, and unfortunately Google indexed them much faster than it is unindexing them. We don't want to risk clogging up our limited crawl log/budget by submitting a sitemap of URLs that have "noindex" on them as a hack for deindexing. Although theoretically that should work, we are looking for white hat methods that are faster than "being patient and waiting it out", since that would likely take months if not years with Google's current crawl rate of our site.

Intermediate & Advanced SEO | | teddef0 -

Rate My Logo!

Hey guys, Can't for the life of me decide which color palette to use for this logo, so please let me know your thoughts! The logo is for a website that specialises in Instagram social media marketing - So without further ado... Green, Blue or Blue with Red Heart? Thoughts, feedback and anything else you want to add! DBFnY

Branding | | camille10 -

Should I Split Into Two Websites?

I'm creating a website for a new company that offers several related services. They want to have a main corporate website that has pages for all their services. However, they want to have a second website that only features a subset of those services. So they would have the same company name and the same website template, but the smaller site would have a different domain name, different text/photos on the home page, and be missing some pages from the main corporate site, so that site would make them look more specialized. They would have separate marketing materials (brochures, business cards) carrying the website address and email address of the different domain name. They also want the smaller second site, and not the main site, to come up in search results related to that site's services. Can this be pulled off without having a significant negative effect on ranking potential for either of the two sites and without risking a duplicate content penalty? It would seem you would have to add a robots.txt file that excludes from indexing the pages on the main site that are duplicated on the smaller site. However, there is a potential big issue. The company is a local business. Nowadays the local results (Map + 3-pack) are as important as, if not more important than, the traditional organic results below the 3-pack (although I acknowledge they are related). For their Google Business Places, since they have two websites for the same company, they can only list one of the websites. So if they list the corporate site, they're not going to get in the local 3-pack for their specialized site's search terms. They may be able to live with this, though, since the main site will show ALL services. Comments? Ideas? Issues? Strategies?

Local SEO | | DJ10270 -

How can I stop a tracking link from being indexed while still passing link equity?

I have a marketing campaign landing page and it uses a tracking URL to track clicks. The tracking links look something like this: http://this-is-the-origin-url.com/clkn/http/destination-url.com/ The problem is that Google is indexing these links as pages in the SERPs. Of course when they get indexed and then clicked, they show a 400 error because the /clkn/ link doesn't represent an actual page with content on it. The tracking link is set up to instantly 301 redirect to http://destination-url.com. Right now my dev team has blocked these links from crawlers by adding Disallow: /clkn/ in the robots.txt file, however, this blocks the flow of link equity to the destination page. How can I stop these links from being indexed without blocking the flow of link equity to the destination URL?

Technical SEO | | UnbounceVan0 -
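When auditing which tracking URLs have been indexed, it can help to map each one back to the destination it redirects to. The sketch below assumes the tracking format is exactly the one shown in the post (`/clkn/<scheme>/<host and path>`); the function name `tracking_destination` is hypothetical, and the real redirect service may treat edge cases differently.

```python
from typing import Optional
from urllib.parse import urlsplit

TRACKING_PREFIX = "/clkn/"  # path prefix taken from the post's example


def tracking_destination(tracking_url: str) -> Optional[str]:
    """Derive the destination URL embedded in a /clkn/ tracking link.

    Returns None when the URL does not follow the assumed
    /clkn/<scheme>/<host-and-path> layout.
    """
    path = urlsplit(tracking_url).path
    if not path.startswith(TRACKING_PREFIX):
        return None
    scheme, separator, rest = path[len(TRACKING_PREFIX):].partition("/")
    if not separator or scheme not in ("http", "https") or not rest:
        return None
    return f"{scheme}://{rest}"


if __name__ == "__main__":
    link = "http://this-is-the-origin-url.com/clkn/http/destination-url.com/"
    print(tracking_destination(link))
```

Running this over the list of indexed /clkn/ URLs gives the set of destination pages whose incoming links are currently hidden behind the Disallow rule.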

How can I find all broken links pointing to my site?

I help manage a large website with over 20M backlinks and I want to find all of the broken ones. What would be the most efficient way to go about this besides exporting and checking each backlink's response code? Thank you in advance!

Intermediate & Advanced SEO | | StevenLevine3 -
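One observation that may cut the work down: many backlinks usually point at the same target page, so checking each unique target URL once is far cheaper than checking each backlink. A minimal sketch of that idea follows, assuming you already have an export of target URLs from some backlink tool. The names `fetch_status` and `broken_targets` are hypothetical, and a real run at this scale would also need rate limiting and concurrency, which are left out here.

```python
from typing import Callable, Dict, Iterable
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request and return the final HTTP status code.

    Redirects are followed, so this is the status of the final URL.
    Returns 0 when no HTTP response is received (DNS failure, timeout).
    """
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code
    except OSError:
        return 0


def broken_targets(
    target_urls: Iterable[str],
    fetch: Callable[[str], int] = fetch_status,
) -> Dict[str, int]:
    """Check each unique target URL once; return those that look broken."""
    broken: Dict[str, int] = {}
    for url in sorted(set(target_urls)):
        status = fetch(url)
        if status == 0 or status >= 400:
            broken[url] = status
    return broken
```

Passing a stub as `fetch` lets you try the de-duplication logic on a small sample before pointing it at the live site.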

I have a client in Australia that is going to set up a website that is in Chinese to service their Asian customer base (Indonesia, Singapore, HK, China). What domain should they use?

Their website is hosted on a .com.au domain. Should they host their Chinese language pages under their current domain (.com.au) using a subdirectory (i.e. /asia) or should they use another separate domain that they own that is a regular .com? Or does it really not matter?

Local Website Optimization | | 100yards1 -

What's a good WPM for a copywriter?

My copywriter is currently hitting 2,100 - 2,500 words over three articles on an average day. He is employed full time, 7.5 hours a day with a 30 minute lunch break (he has the choice of a 1 hour lunch and leaving 30 minutes later). Let's say only 6 hours are spent researching and writing: 2,500 words / 360 minutes = 6.9 WPM. The content is generally rewritten from other websites with a little bit of unique content, on topics that are usually not complicated - the articles themselves are along the lines of a broad summary of what the other website offers/does. The content I receive is fairly generic and doesn't really say anything more than the source material. No formatting is done and generally I receive very large wall-of-text paragraphs. The content is written in one program and then copy/pasted into Word to be delivered. All keywords to use are provided, as well as ~50 words and phrases related to the topic. The ~50 words and phrases are usually presented in a list ("they offer x, x, x, x and x, as well as x, x and x" etc.), so this part of the task shouldn't be taking long. I am trying to gauge whether this is typical and what I should expect from someone who does this each day, as from previous roles I know more is definitely doable, but as for whether it's doable every working day I'm not sure. What do you usually receive from your copywriters for a day of work?

Content Development | | helenlorettahasan0 -
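The arithmetic in the post above covers only the top of the range. Here is the same calculation for both ends, using the post's own figures (2,100 to 2,500 words, 6 hours = 360 minutes of writing time); the function name is just for illustration.

```python
def words_per_minute(words: int, minutes: int) -> float:
    """Average output rate over the whole working period."""
    return words / minutes


WRITING_MINUTES = 6 * 60  # the post's assumption of 6 productive hours

low = words_per_minute(2100, WRITING_MINUTES)
high = words_per_minute(2500, WRITING_MINUTES)
print(f"{low:.1f} to {high:.1f} WPM")  # prints "5.8 to 6.9 WPM"
```

Note that this is an average that includes research time, so it is not comparable to a typing-speed figure.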

One domain - Multiple servers

Can I have the root domain pointing to one server and other URLs on the domain pointing to another server without redirecting, domain masking or HTML masking? Dealing with an old site that is a mess. I want to avoid migrating the old website to the new environment. I want to work on a page by page and section by section basis, and whatever gets ready to go live I will release on the new server while keeping all other pages untouched and live on the old server. What are your recommendations?

Intermediate & Advanced SEO | | Joseph-Green-SEO0 -

Thought FRED penalty - Now see new spammy image backlinks what to do?

Hi, So starting about March 9 I started seeing huge losses in ranking for a client. These rankings have continued to drop every week since, and we changed nothing on the site. At first I thought it must be the FRED update, so we have started rewriting and adding product descriptions to our pages (which is a good thing regardless). I also checked our backlink profile using OSE on Moz and still saw the few linking root domains we had. Another odd thing is that Webmaster Tools showed many more domains. So today I bought a subscription to Ahrefs and instantly saw that on the same timeline (starting March 1, 2017) until now, we have literally doubled in inbound links from very spammy sites. BUT the incoming links are not to content; people seem to be ripping off our images. So my question is, do spammy inbound image links count against us the same as if someone linked to actual written content or non-image URLs? Is FRED something I should still be looking into? Should I disavow a list of inbound image links? Thanks in advance!

Intermediate & Advanced SEO | | plahpoy0 -

More pages or less pages for best SEO practices?

Hi all, I would like to know the community's opinion on this. Will a website with more pages or fewer pages rank better? Websites with more pages have the advantage of more landing pages for targeted keywords. Fewer pages have the advantage of concentrating PageRank on a limited number of pages, which might result in better ranking of those pages. I know this is highly dependent on the site. I'm looking for answers for an ideal website. Thanks,

Algorithm Updates | | vtmoz1 -

Redirect to 'default' or English (/en) version of site?

Hi Moz Community! I'm trying to work through a thorny internationalization issue with the 'default' and English versions of our site. We have an international set-up of: www.domain.com (in English), www.domain.com/en, www.domain.com/en-gb, www.domain.com/fr-fr, www.domain.com/de-de and so on... All the canonicals and hreflangs are set up, except the English language version is giving me pause. If you visit www.domain.com, all of the internal links on that page (due to the current way our CMS works) point to www.domain.com/en/ versions of the pages. Content is identical between the two versions. The canonical on, say, www.domain.com/en/products points to www.domain.com/products. Feels like we're pulling in two different directions with our internationalization signals. Links go one way, canonical goes another.

Three options I can see:

1. Remove the /en/ version of the site. 301 all the /en versions of pages to /. Update the hreflangs to point the EN language users to the / version.

2. Redirect the / version of the site to /en. The reverse of the above.

3. Keep both the /en and the / versions, update the links on the / version. Make it so that visitors to the / version of the site follow links that don't take them to the /en site.

It feels like the /en version of the site is redundant and potentially sending confusing signals to search engines (it's currently a bit of a toss-up as to which version of a page ranks). I'm leaning toward removing the /en version and redirecting to the / version. It would be a big step as currently - due to the internal linking - about 40% of our traffic goes through the /en path. Anything to be aware of? Any recommendations or advice would be much appreciated.

International SEO | | MaxSydenham0
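For the first option in the post above (drop /en and serve English from the root), the hreflang annotations for a page would need to point English users at the root version. The sketch below generates such a set under that assumption. The locale list is taken from the post; the `LOCALE_PREFIXES` mapping, the `hreflang_tags` function, and the choice of the root version as `x-default` are illustrative assumptions, not a statement of how the site is or should be configured.

```python
from html import escape
from typing import Dict, List

# Assumption: English lives at the root, other locales under a path prefix.
LOCALE_PREFIXES: Dict[str, str] = {
    "en": "",
    "en-gb": "/en-gb",
    "fr-fr": "/fr-fr",
    "de-de": "/de-de",
}


def hreflang_tags(
    base: str,
    page_path: str,
    locales: Dict[str, str] = LOCALE_PREFIXES,
) -> List[str]:
    """Build <link rel="alternate"> tags for one page across all locales."""
    tags = []
    for code, prefix in locales.items():
        href = escape(f"{base}{prefix}{page_path}")
        tags.append(f'<link rel="alternate" hreflang="{code}" href="{href}" />')
    # Assumption: the root (English) version doubles as the fallback.
    fallback = escape(f"{base}{page_path}")
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return tags


if __name__ == "__main__":
    for tag in hreflang_tags("https://www.domain.com", "/products"):
        print(tag)
```

The same set of tags would go on every locale version of the page, so that each version references all the others and itself.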
